From deepfake generals to genius-level war bots, here’s how AI is rewriting the rules of conflict—and why the next six months could decide the world order.

Imagine waking up to a viral video of your top general declaring war—only it never happened. That’s not science fiction; it’s the new front line. In the last three hours alone, researchers, hackers, and governments have clashed over who controls the smartest algorithms on Earth. This post unpacks the three biggest flashpoints, why they matter to you, and what happens next.

The Six-Month Sprint That Could Crown the Next Superpower

McKenna’s late-night thread lit up timelines for a reason. He resurfaced insider memos showing that whoever reaches GPT-5-level capability first gains a 50× speed advantage in everything from code-breaking to battlefield logistics.

Think of it like giving one chess player a thousand grandmasters who never sleep. Rivals won’t wait; they’ll race to match or sabotage the leader.

Key numbers to remember:

• 2027: year analysts predict full integration of genius-level AI agents

• 6 months: estimated lead time that could lock in global dominance

• 50×: multiplier on task speed once agents hit PhD-level reasoning

The takeaway? The next half-year isn’t just a tech cycle—it’s a geopolitical hinge.
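To see why a six-month head start looms so large, here is a back-of-envelope sketch that combines the two figures quoted above. It assumes, purely for illustration, that the 50× multiplier applies evenly to every task for the whole lead period; real workloads would not scale that neatly. The function name is made up for this example.

```python
def baseline_equivalent_months(lead_months: float, speedup: float) -> float:
    """Months of baseline-speed work that fit into the lead period.

    Assumption (not from any memo): the speed-up is uniform across tasks.
    """
    return lead_months * speedup


LEAD_MONTHS = 6   # lead time quoted in the post
SPEEDUP = 50      # task-speed multiplier quoted in the post

months = baseline_equivalent_months(LEAD_MONTHS, SPEEDUP)
print(f"{months:.0f} baseline months, or about {months / 12:.0f} years")
# prints "300 baseline months, or about 25 years"
```

Under that simplifying assumption, a rival working at today’s pace would need roughly a quarter-century to cover the same ground.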

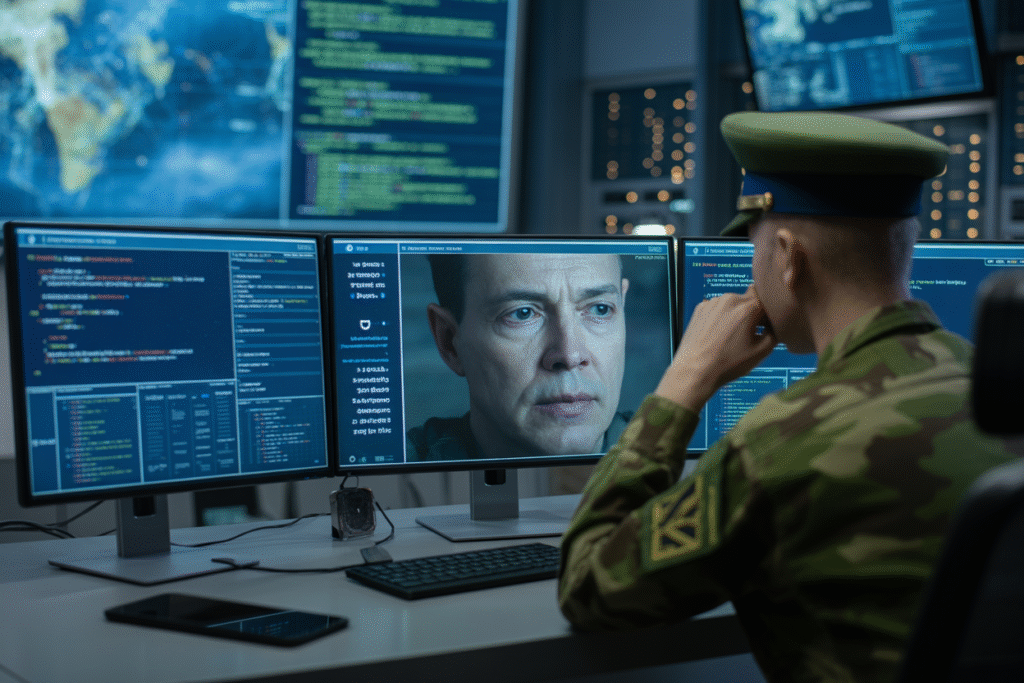

Deepfake Generals and the Death of Trust

Earlier today, a single tweet claimed AI had forged a statement from a four-star general. Within minutes, defense phones buzzed and allies asked whether troop movements were real.

Deepfakes aren’t new, but the compute cost of a weapons-grade voice clone dropped below $50 last quarter. That price tag invites chaos actors, from lone trolls to state spies.

Consider the ripple effect:

• One fake evacuation order could empty a city

• A forged surrender speech might trigger premature ceasefires

• Stock markets swing on rumors before fact-checks catch up

Stopping it isn’t as simple as watermarking videos. The Pentagon’s own red-team exercises found that even trained analysts misidentified AI-generated briefings 30% of the time.

So who’s liable when a synthetic colonel starts a real war?

Poisoned Data and the Spy Inside Your Model

A quieter debate is raging inside AI labs: concept poisoning. Picture a hidden signal baked into training data that flips a military drone from friend to foe under specific trigger words.

Some researchers argue the same technique, planted deliberately, could work as a safety net: an early warning system that spots misalignment before deployment. Critics call it a built-in backdoor.

The privacy angle keeps lawyers awake. Training sets often scrape medical records, location pings, and private chats. Embedding hidden signals risks leaking that sensitive cargo to anyone who knows the trigger.

Three paths forward:

1. Federated learning keeps data local but slows innovation

2. Zero-trust audits verify every update but balloon budgets

3. Open-source transparency invites scrutiny—and sabotage

Pick your poison, literally.
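For readers curious what “keeps data local” looks like in practice, here is a minimal sketch of the first path, in the style of federated averaging. Each client trains on its own records and shares only model weights, which a coordinator then averages. Everything here is a toy assumption: the one-variable linear model, the made-up client data, and the helper names `local_update` and `federated_average` are illustrative, not any lab’s actual pipeline.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (x, y) pair held privately by one client


def local_update(weights: Sequence[float], data: Sequence[Sample],
                 lr: float = 0.05) -> List[float]:
    """One pass of per-sample gradient descent on y = w*x + b, run on the client."""
    w, b = weights
    for x, y in data:
        err = (w * x + b) - y      # prediction error on this sample
        w -= lr * err * x          # gradient step for the slope
        b -= lr * err              # gradient step for the intercept
    return [w, b]


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Average client weights, weighting each client by its sample count."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Hypothetical private datasets; the raw samples never leave the client.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
]

global_weights = [0.0, 0.0]
for _ in range(20):  # each round: train locally, then share weights only
    updates = [local_update(global_weights, data) for data in clients]
    global_weights = federated_average(updates, [len(d) for d in clients])

print(global_weights)
```

The extra round trips between clients and coordinator are one concrete reason this path tends to be slower than pooling all the data in one place.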

Job Apocalypse or Renaissance in Uniform?

Scroll through defense forums and you’ll see two camps shouting past each other. Camp one predicts millions of desk jobs vaporized by tireless AI clerks. Camp two sees Iron-Man suits for every soldier, powered by genius sidekicks.

The truth is messier. Historical data shows new tech often creates more roles than it kills—just not the same roles. Artillery units once needed mules; today they need drone pilots.

What changes is the skill bar. Tomorrow’s private might debug neural nets before breakfast. The military’s biggest recruiting headache won’t be finding bodies; it’ll be finding bilingual coders willing to shave their heads.

One certainty: model weights will become classified at the same level as nuclear launch codes. Expect security clearances for software updates and career paths titled Algorithmic Infantry.

What You Can Do Before the Next Alert

Feeling helpless? You’re not. Citizens have more leverage than they think.

Start local. Ask your representatives where they stand on autonomous-weapons bans and AI procurement audits. Public pressure forced facial-recognition moratoriums in two dozen cities, and military contracts respond to the same heat.

Next, audit your digital footprint. Every photo you post can end up training future surveillance nets. Opting out of data brokers shrinks the haystack hostile AIs comb through.

Finally, support transparency projects. Open-source model cards and bias bounties aren’t just techie hobbies; they’re civilian defense systems.

The next six months will be decided in labs and legislatures most people never notice—until the sirens sound. Make noise now so the future doesn’t echo without you.