AI replacing humans is no longer a future fear—it’s today’s trending debate. From billion-dollar valuations to street-level surveillance, here’s what just happened and why it matters.

The AI Jobquake: Why This Week Feels Different

AI replacing humans isn’t tomorrow’s headline; it’s today’s reality. From facial recognition on New Orleans streets to billion-dollar valuations at OpenAI, the debate is white-hot. This post unpacks the ethics, risks, and hype that have swirled around AI job displacement over the last 24 hours. Ready to separate signal from noise? Let’s dive in.

From Hype to Headlines: What Just Went Viral

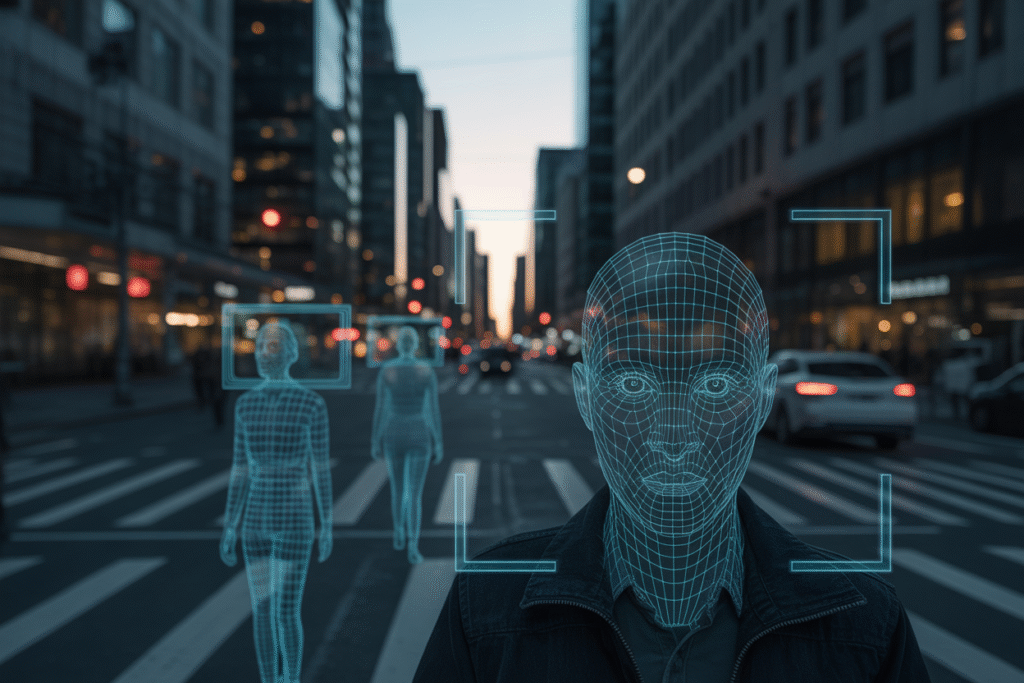

Scroll through X right now and you’ll see the same refrain: “AI is coming for your job.” But the freshest twist is the claim that the bubble won’t pop because AI is already too useful. One viral post argues that machine-learning models excel at real-world tasks—think autonomous drones spotting trespassers or algorithms flagging “radical” tweets. The catch? These same tools can just as easily surveil peaceful protesters or mislabel innocent people as threats.

Supporters cheer the efficiency gains: faster security responses, lower labor costs, and round-the-clock monitoring. Critics counter with nightmare scenarios—an algorithm decides you’re suspicious, and suddenly you’re on a no-fly list with no human to appeal to. The tension between safety and civil liberty has never felt sharper.

Key flashpoints in the last three hours:

• Facial recognition deployed by a nonprofit in New Orleans, skirting local bans

• Project Nimbus, the Israeli government cloud contract Amazon shares with Google, allegedly feeding AI targeting data in Gaza

• OpenAI rumored to hit a $500B valuation, framed as proof the tech is “too big to fail”

Each story feeds the same question: are we building guardrails or guillotines?

Amazon, OpenAI, and the Ethics Firestorm

Let’s zoom in on Amazon. A former union leader posted that the company’s cloud and AI tools amount to “active complicity” in military operations. The post ricocheted across tech Twitter, racking up thousands of retweets and sparking heated threads about corporate ethics.

On one side, national-security hawks argue that advanced AI keeps troops safer and allies stronger. On the other, human-rights advocates point to reports of civilian casualties linked to algorithmic targeting errors. The debate isn’t abstract: it’s about real people losing their livelihoods or, worse, their lives.

Meanwhile, OpenAI’s rumored valuation has traders calling the company the backbone of future tech. Skeptics roll their eyes, recalling the dot-com bust. Yet the infrastructure (data centers, chips, talent) feels more tangible than the Pets.com sock puppet ever did. Could this time be different, or are we watching the next bubble inflate in real time?

What’s clear: every viral post, every leaked contract, every billion-dollar valuation is a data point in a much larger ethical experiment.

The Human Toll Behind the Headlines

Numbers can numb us, so let’s humanize the stakes. Imagine a warehouse worker whose job is suddenly done by a robot that never sleeps. Or a content moderator replaced by an AI that flags posts in milliseconds—sometimes incorrectly, sometimes with bias baked in from flawed training data.

The ripple effects are massive:

• Job displacement concentrated in low-income communities

• Privacy erosion as surveillance tools become cheaper and more pervasive

• A widening trust gap between citizens and the tech that governs them

But it’s not all doom. New roles—AI ethicist, prompt engineer, algorithm auditor—are popping up faster than universities can design curricula. The challenge is retraining at scale before resentment hardens into backlash.

Ask yourself: would you rather be the person training the algorithm or the one displaced by it? The answer may depend on how quickly society invests in reskilling programs and transparent oversight.

Your Next Move in the AI Age

So where do we go from here? First, demand transparency. When a city rolls out facial recognition, ask who trained the model, what data they used, and how false positives will be handled. Second, support policies that tie tech subsidies to reskilling funds, so that every robot deployed helps pay to retrain a human worker.

On a personal level, stay curious. Experiment with AI tools, learn prompt engineering, and understand the tech before it understands you. Share this post, tag a friend, and start a conversation—because the future of work isn’t something that happens to us, it’s something we shape together.

Ready to join the debate? Drop your hottest take below and let’s keep the dialogue human.