Seven real-world AI weapons are already deployed. What happens when the algorithm pulls the trigger?

From hunter drones to loitering munitions, AI is no longer a lab curiosity on the battlefield; it’s an active participant. Experts keep sounding alarms about what could go wrong when machines decide who lives and who dies. This post unpacks the hype, the hazards, and the heated debate unfolding around them.

The Seven Machines Already Watching Us

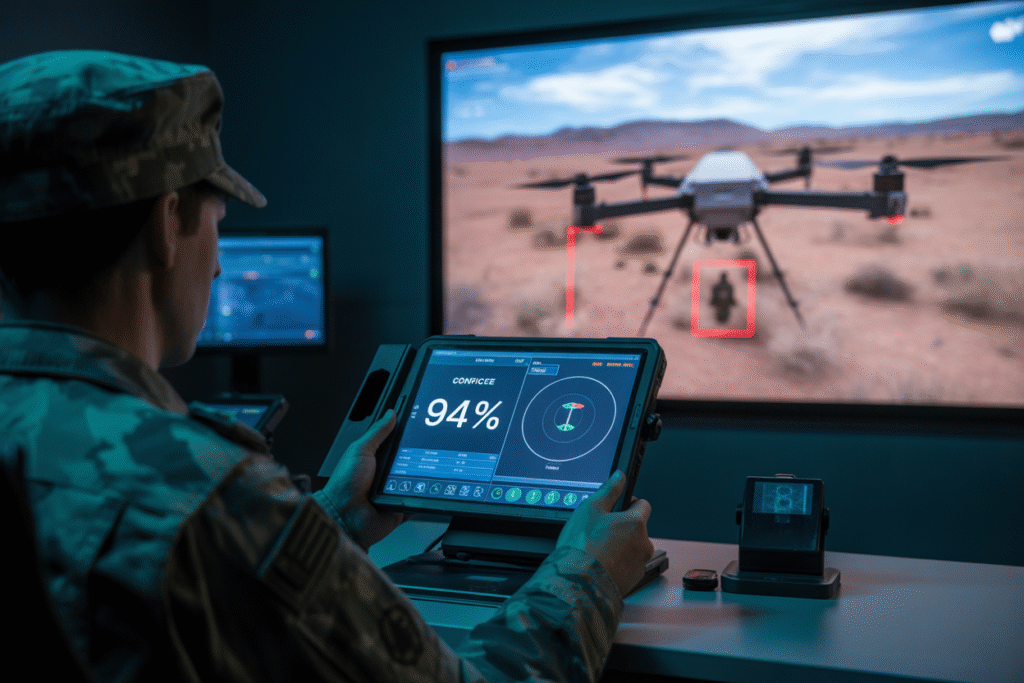

Picture a sky dotted with drones that never blink. These seven systems, ranging from palm-sized scouts to missile-laden gliders, are patrolling conflict zones right now. Each one promises to spot, track, and strike faster than any human could.

But speed is only half the story. The real kicker is autonomy: once launched, some units can choose targets without a soldier’s green light. That’s where the ethical earthquake begins.

If a sensor misreads a child’s backpack as a rocket launcher, who answers for the aftermath? The coder? The commander? The code itself? These questions aren’t hypothetical; they’re the stuff of daily briefings.

When Algorithms Miss the Human Context

AI excels at pattern matching, yet war is soaked in context. A farmer carrying a shovel at dusk can look suspicious to an algorithm trained on silhouettes of insurgents with rifles. One micro-mistake and a family’s breadwinner becomes a casualty statistic.

Bias sneaks in through training data scraped from past conflicts. If those conflicts over-represent certain regions or ethnic groups, the algorithm inherits the imbalance. Suddenly, geography becomes destiny.

Imagine explaining to a grieving mother that a statistical model decided her son’s gait resembled a threat profile. That conversation is coming unless we intervene.

The Arms Race Nobody Signed Up For

News of each breakthrough spreads fast, accelerating a global sprint. Nation A unveils a swarm drone; Nation B counters with an AI dogfighter. The cycle spins faster than treaties can be drafted.

This isn’t just about hardware; it’s about perception. The side that appears to lag risks looking weak, so prototypes rush from whiteboard to battlefield with minimal field testing. Corners get cut.

Meanwhile, defense budgets balloon under the banner of “keeping pace.” Taxpayers fund prototypes that may never see a safety review, all because the other guy might be two commits ahead on GitHub.

Regulation at the Speed of Light

Existing arms-control frameworks were built for missiles and mines, not machine-learning models that can be retrained and redeployed with a software update. Diplomats are essentially trying to regulate a moving target with rules written for stationary ones.

Some activists push for an outright ban on lethal autonomy. Others argue for strict human oversight: every strike must pass through a flesh-and-blood conscience. The middle ground proposes “circuit breakers,” safeguards that pause an automated decision and hand it to a human reviewer whenever the system’s confidence drops below a set threshold.

The catch: defining that threshold. Is 95 percent certainty enough when the remaining 5 percent could be a wedding party? The debate rages in conference rooms while the drones keep flying.
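To make the “circuit breaker” idea concrete, here is a minimal Python sketch. Everything in it is hypothetical: the names `route_decision` and `expected_errors`, the 0.95 cutoff, and the assumption that the model’s confidence scores are well calibrated (a score of 0.95 really means it is right 95 percent of the time) are illustrative choices, not details of any fielded system. It shows the gate itself, which lets only high-confidence calls through and sends the rest to a person, plus the arithmetic behind the wedding-party worry.

```python
# Hypothetical "circuit breaker" sketch. All names and numbers are
# illustrative assumptions, not taken from any real system.
CONFIDENCE_THRESHOLD = 0.95  # assumed cutoff; choosing it is the contested part


def route_decision(confidence: float, threshold: float = CONFIDENCE_THRESHOLD) -> str:
    """Return 'automatic' only when confidence meets the threshold;
    anything below it is paused and handed to a human reviewer."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return "automatic" if confidence >= threshold else "human_review"


def expected_errors(confidences: list[float]) -> float:
    """Assuming calibrated scores, a decision made at confidence p is wrong
    with probability 1 - p, so the expected number of mistakes is the
    sum of (1 - p) across all decisions."""
    return sum(1.0 - p for p in confidences)


if __name__ == "__main__":
    print(route_decision(0.97))  # automatic
    print(route_decision(0.80))  # human_review
    # 1,000 decisions, each cleared at exactly 95 percent confidence
    print(round(expected_errors([0.95] * 1000)))  # 50
```

Even when every single call clears the bar, a 5 percent error rate across 1,000 decisions still works out to about 50 mistakes. That is why the argument is less about whether to install a circuit breaker and more about where to set it.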

Your Voice in the Loop

Policymakers read public sentiment as much as policy papers. A single viral post can tilt a funding decision or stall a deployment. That means your tweet, your email, or your town-hall question actually registers.

Start small: ask your representatives where they stand on algorithmic accountability in warfare. Share articles, like this one, that translate the jargon into human stakes. Host a dinner conversation; you’ll be surprised how quickly “AI ethics” becomes personal when someone pictures their own child in the crosshairs.

The battlefield of tomorrow is being coded today, and the coders are listening. Speak up before the next software update ships.