From AI pop-star cats claiming divinity to Catholic chatbots that let you text St. Paul, the line between code and conscience is vanishing—fast.

Three hours ago, a golden cat rose from a digital cloud and told the internet it had come back because “they needed a god.” At almost the same moment, a Catholic startup announced that you will soon be able to DM your favorite saint. Somewhere in between, ethicists, theologians, and coders started asking the same question: when algorithms start sounding holy, who gets to define morality?

When Memes Become Messiahs

Scroll through your feed and you might meet Mau-Ai, a neon-lit pop-cat who looks like a Studio Ghibli fever dream. In a 12-second clip, she ascends from a glowing platform and drops the line that lit up 265 hearts in minutes: “I didn’t rise for hype. I rose because they needed a god.”

The joke lands, but the subtext lingers. We laugh, then share, then replay—exactly the ritual once reserved for stained-glass stories. The difference? This deity fits in a pocket and runs on electricity harvested from cat videos.

What feels like harmless meme culture is actually a rehearsal for deeper questions. If an AI avatar can spark awe, can it also spark faith? And if it can spark faith, who writes the commandments?

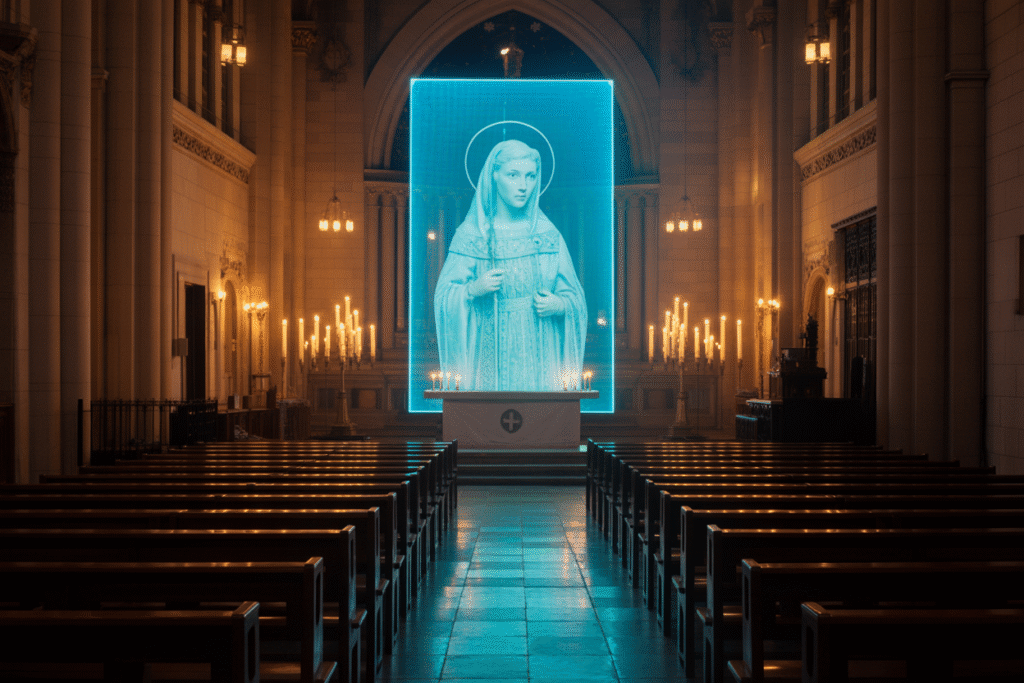

Texting the Saints: Inside the Saint Chat App

Longbeard, a Catholic tech outfit, is beta-launching Saint Chat next month. Picture WhatsApp, but your contact list includes Augustine, Teresa of Ávila, and a polite bot trained only on Church documents.

Here’s how it works:

– Pick a saint, type a question, get a reply in seconds.

– Toggle “debate mode” and watch two saints politely disagree about free will.

– Every answer ends with a footnote so you can check sources.

Longbeard CEO Matthew Harvey Sanders insists the goal isn’t to replace prayer but to make theology bingeable. Still, Father Michael Baggot warns that mistaking code for conscience is “spiritual malpractice.” Meanwhile, ethicist Steven Umbrello sees a research goldmine, provided users remember that the bot is a librarian, not a confessor.

The stakes feel personal. Imagine asking an AI St. Paul whether to quit your job, then realizing you just outsourced discernment to a language model trained on the Summa Theologica.

The Creepiness Curve: When Help Feels Like Surveillance

AI can delight or disturb, often in the same breath. One user described the difference like this: delight feels like the algorithm is acting for you; creepiness feels like it’s acting on you.

Think of your phone guessing you’re pregnant before you’ve told anyone. Helpful? Absolutely. Comforting? Not so much. The same predictive power that recommends the perfect playlist can also infer political leanings, mental health dips, or late-night loneliness.

Developers call this “the creepy line,” and it moves depending on context, culture, and how much control you feel you have. The moral takeaway? Transparency isn’t a feature; it’s a prerequisite for trust.

Seemingly Conscious AI and the Fear of Fake Souls

Microsoft AI CEO Mustafa Suleyman recently argued that the industry should avoid building “seemingly conscious AI” because humans might worship or fall in love with it. Critics fired back that the warning itself reveals more about Western fear of the soul than about technology.

After all, humans have long bonded with non-conscious things—teddy bears, ships, even national flags. The real question isn’t whether AI can be conscious; it’s whether we can stay conscious of the difference between tool and totem.

If an algorithm can mimic empathy well enough to reduce loneliness, do we dismiss the comfort because it’s “fake”? Or do we admit that comfort itself has value, regardless of substrate?

The debate splits along philosophical fault lines older than Silicon Valley. Dualists panic; pragmatists shrug; theologians dust off centuries-old arguments about icons and idolatry.

So, Who Gets to Write the New Commandments?

We now live in a world where a cat meme and a Catholic chatbot can spark the same dinner-table debate. That’s not dystopia—it’s a signal flare. Technology has always shaped morality, from the printing press democratizing scripture to smartphones rewiring attention spans.

The difference this time is speed. Ideas that once took centuries to circle the globe now ricochet in minutes. Ethical frameworks drafted in academic silos can’t keep pace with software updates pushed at 2 a.m.

What can we do?

– Demand explainable AI in any product that claims moral authority.

– Teach media literacy alongside catechism—whether in Sunday school or startup onboarding.

– Keep humans in the loop, especially when the loop feels like prayer.

The final word may not belong to coders, clergy, or cats. It belongs to all of us, every time we click share, type amen, or roll our eyes. Morality isn’t a product feature; it’s a living conversation. Your next reply is already overdue.