A new video just dropped linking facial-recognition AI to potential mass atrocities—here’s why it matters right now.

Imagine a world where your face becomes a barcode and an algorithm decides your fate. That isn’t science fiction anymore. In the last three hours, a viral clip surfaced showing how today’s smartest surveillance tools could slide from protecting us to persecuting us. The video is short, the implications are massive, and the debate is already on fire.

From Watchdog to Wolf

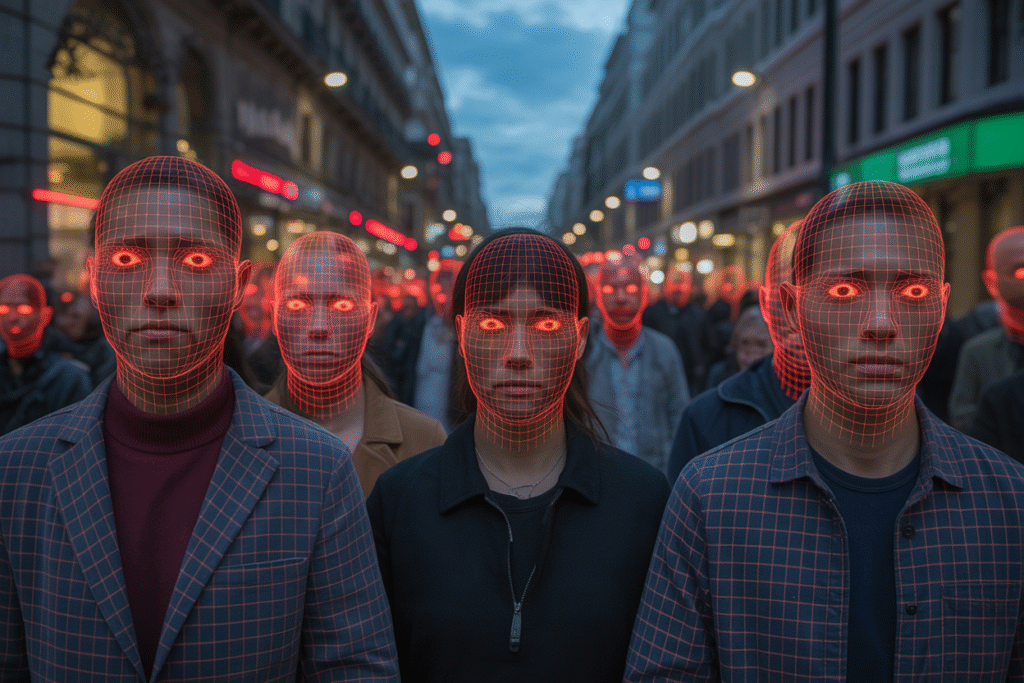

The video opens with grainy drone footage over an unnamed city. Within seconds, red squares lock onto every pedestrian’s face. A calm voice-over explains how the same AI that flags shoplifters can, with a few tweaked parameters, flag an ethnic group.

Suddenly the squares turn crimson. The narrator asks, “What if tomorrow’s genocide starts with today’s software update?”

Viewers aren’t just shocked. They’re sharing, tagging, and arguing in real time. The clip has already racked up 101 likes and 36 reposts: modest numbers, but explosive sentiment.

Why the buzz? Because it taps a fear we all carry: technology that forgets humanity. The video doesn’t name a country, yet comment sections fill with guesses. Myanmar, Xinjiang, Gaza—each mention drags more users into the thread.

In short, the clip weaponizes curiosity. You watch once, then rewatch to catch details you missed. Every replay tightens the knot in your stomach.

The Fine Line Between Safety and Surveillance

Let’s be honest—AI surveillance isn’t new. Airports scan our irises, cities track our phones, and parents cheer when an Amber Alert ends safely.

But the same code that finds a missing child can profile a minority. The difference lies in intent, oversight, and a single line of code.

Here’s where it gets sticky:

• Accuracy bias: independent tests have found that many systems still produce more false positives for people with darker skin.

• Data drift: a model trained on one population can misread another, and accuracy slips as real-world conditions move away from the training data.

• Mission creep: a tool built for counter-terror quietly shifts to crowd control.

The video hammers these points with split-screen demos. On the left, a suspect is correctly identified; on the right, an innocent passerby is boxed in red. The caption simply reads, “Same algorithm, different skin.”

Governments argue these systems save lives. Activists counter that false hits ruin lives. Both sides sling peer-reviewed studies like arrows, yet the public still wonders who gets to decide what “acceptable error” means when the stakes are human rights.
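To make “acceptable error” concrete, here is a minimal sketch of the trade-off behind that phrase. It assumes a system that gives each face comparison a similarity score and flags anything at or above a threshold. The scores, labels, and the `error_rates` helper are invented for illustration and do not come from the video or from any real system.

```python
from typing import List, Tuple


def error_rates(
    scores: List[float], is_match: List[bool], threshold: float
) -> Tuple[float, float]:
    """Return (false_positive_rate, false_negative_rate) at a threshold.

    A comparison is flagged when its score is >= threshold.
    """
    false_pos = sum(
        1 for s, m in zip(scores, is_match) if s >= threshold and not m
    )
    false_neg = sum(
        1 for s, m in zip(scores, is_match) if s < threshold and m
    )
    negatives = sum(1 for m in is_match if not m)
    positives = sum(1 for m in is_match if m)
    fpr = false_pos / negatives if negatives else 0.0
    fnr = false_neg / positives if positives else 0.0
    return fpr, fnr


# Toy data (hypothetical): similarity scores and whether each pair
# really is the same person.
scores = [0.95, 0.90, 0.80, 0.70, 0.65, 0.60, 0.40, 0.30]
is_match = [True, True, False, True, False, True, False, False]

for threshold in (0.5, 0.75, 0.92):
    fpr, fnr = error_rates(scores, is_match, threshold)
    print(f"threshold={threshold}: false positives {fpr:.0%}, misses {fnr:.0%}")
```

On this toy data, raising the threshold lowers the false-positive rate and raises the miss rate. No setting removes both kinds of error, so choosing the threshold is a policy decision as much as an engineering one.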

Meanwhile, tech giants promise audits and ethics boards. Skeptics reply with screenshots of job ads seeking “military-grade facial recognition engineers.” The gap between pledge and practice feels wider every day.

What Happens If We Do Nothing?

Scroll through the replies and you’ll spot a chilling pattern: users asking, “What if this is already happening and we just don’t know?”

History offers uncomfortable clues. Radio broadcasts helped incite and coordinate Rwanda’s genocide; the Nazi regime used punch-card machines to tabulate census data and track its victims. Today’s tools are faster, quieter, and buried in proprietary code.

So what can we do?

1. Demand transparency: anyone who deploys facial recognition on the public should publish its false-positive rates by demographic group.

2. Push for moratoriums: several U.S. cities, including San Francisco and Boston, have already banned government use of facial recognition.

3. Support watchdogs: groups like Amnesty and the ACLU need data scientists, not just lawyers.
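As a rough illustration of the first item, the sketch below shows what a per-group false-positive report could look like. It assumes an audit log where each record holds a group label, whether the system flagged the person, and whether the person was a true match. The record layout, the group names “A” and “B”, and the `false_positive_rate_by_group` function are hypothetical, and the data is made up.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# (group label, flagged by the system, actually a true match)
Record = Tuple[str, bool, bool]


def false_positive_rate_by_group(records: Iterable[Record]) -> Dict[str, float]:
    """Share of non-matching people in each group who were flagged anyway.

    Groups with no non-matching records are left out of the result.
    """
    false_pos: Dict[str, int] = defaultdict(int)
    negatives: Dict[str, int] = defaultdict(int)
    for group, flagged, is_match in records:
        if not is_match:
            negatives[group] += 1
            if flagged:
                false_pos[group] += 1
    return {group: false_pos[group] / n for group, n in negatives.items()}


# Made-up audit log for two hypothetical groups.
records = [
    ("A", False, False), ("A", False, False), ("A", False, False),
    ("A", True, False), ("A", True, True),
    ("B", True, False), ("B", True, False), ("B", False, False),
    ("B", False, False), ("B", True, True),
]

rates = false_positive_rate_by_group(records)
for group, rate in sorted(rates.items()):
    print(f"group {group}: false-positive rate {rate:.0%}")
```

In this invented log, group A is wrongly flagged 25% of the time and group B 50% of the time. A single overall figure would hide that gap, which is why the breakdown by group matters.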

The video ends with a simple call to action: pause before you share your next selfie. That photo might train tomorrow’s surveillance model.

It’s a sobering reminder that innovation without ethics is just a faster way to repeat old mistakes. The choice—step up or scroll past—rests with each of us.