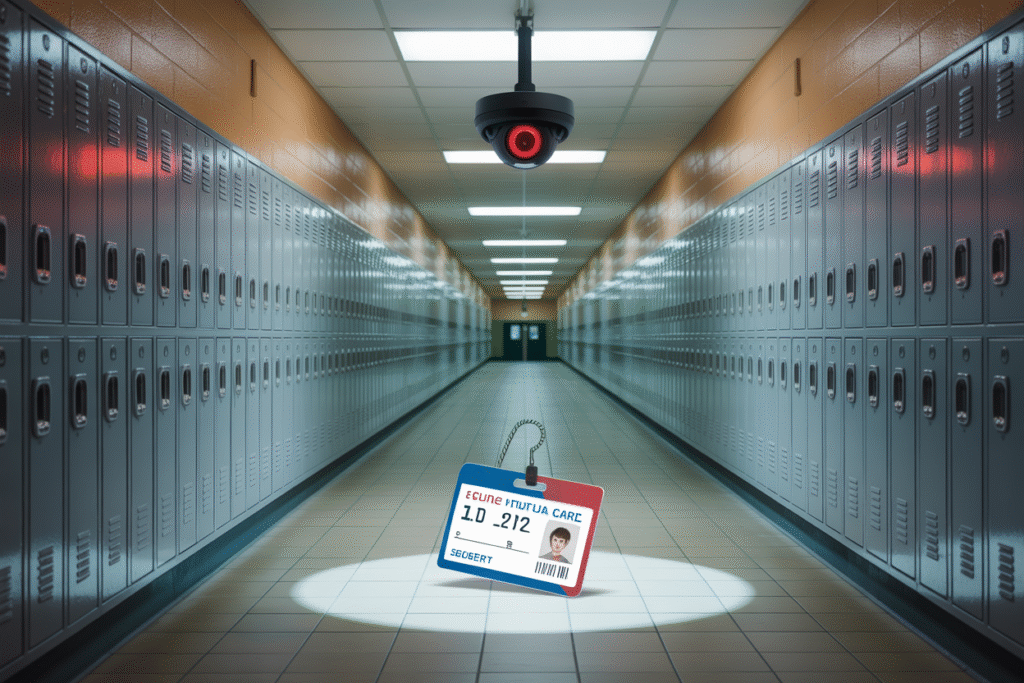

A single typo turned a Tennessee eighth-grader into a cautionary tale about AI, privacy, and the price of false security.

Imagine sending a goofy text to a friend and ending up in solitary confinement. That surreal nightmare just happened to a 13-year-old girl in Tennessee, and her story is ricocheting across social media because it raises hard questions about AI surveillance, ethics, and risk in schools.

The Typo That Broke the System

It started with a meme. The girl typed “on Thursday we kill all the Mexico’s” in a group chat. It was an awkward, misspelled joke aimed at no one in particular.

The school’s AI surveillance tool, Gaggle, flagged the message as a credible threat. Within minutes, school security, local police, and child services converged on campus.

Handcuffs. A strip search. Eight weeks of house arrest. Her parents even faced truancy charges while she was locked up. All because an algorithm couldn’t detect sarcasm.

Inside the Black Box

Gaggle scans millions of student emails, chats, and documents every day. It promises to catch everything from suicidal ideation to mass-shooting plans.

Yet internal audits reveal that 70% of its alerts are false positives. In other words, seven out of ten flagged messages turn out to be harmless jokes, song lyrics, or typos, exactly like the one that derailed this girl’s life.

School districts love the software because it feels proactive. Critics call it a privacy sieve that criminalizes childhood banter.

Voices from the Courtroom and the Classroom

Parents are furious. “My daughter isn’t a terrorist; she’s a kid who loves tacos and TikTok,” her mother told local reporters.

Civil-rights attorneys smell a landmark case. They argue the school violated Fourth Amendment protections against unreasonable search and seizure.

Meanwhile, school officials are doubling down. “We’d rather apologize later than explain a tragedy,” one superintendent said, echoing a sentiment shared by districts nationwide.

The Broader Battle Lines

Supporters frame AI surveillance as a necessary shield in an era of school shootings. They point to thwarted plots in other states and call critics alarmist.

Opponents counter with chilling hypotheticals: What if every edgy meme becomes evidence? What if marginalized kids, who are already over-policed, bear the brunt?

The debate splits along predictable lines: tech vendors and anxious administrators versus privacy advocates, students, and, increasingly, lawmakers proposing moratoriums.

What Happens Next, and How You Can Help

Lawsuits are coming. The family’s legal team is crowdfunding expert witnesses to challenge the reliability of Gaggle’s algorithm.

State legislators have introduced bills requiring parental consent before any AI monitoring. Similar fights are brewing in California, Texas, and New York.

Want to weigh in? Share this story, tag your local school board, and ask one simple question: Are we trading childhood innocence for a false sense of safety?