A single viral video claims the U.S. now runs an Israeli-grade AI surveillance grid that flags citizens for crimes they haven’t committed yet. Here’s why the debate exploded.

Scroll through your feed this morning and you might have seen the clip: a lone voice warning that America quietly flipped the switch on predictive policing at scale. The post landed at 5:42 a.m. and racked up 30 likes, 10 reposts, and thousands of views before most people had poured their coffee. It’s not just another conspiracy thread; it’s the latest flashpoint in the AI ethics conversation. Below, we unpack what’s being claimed, who stands to win or lose, and the uncomfortable questions we all need to ask before the next alert pings.

The Viral Clip That Lit the Fuse

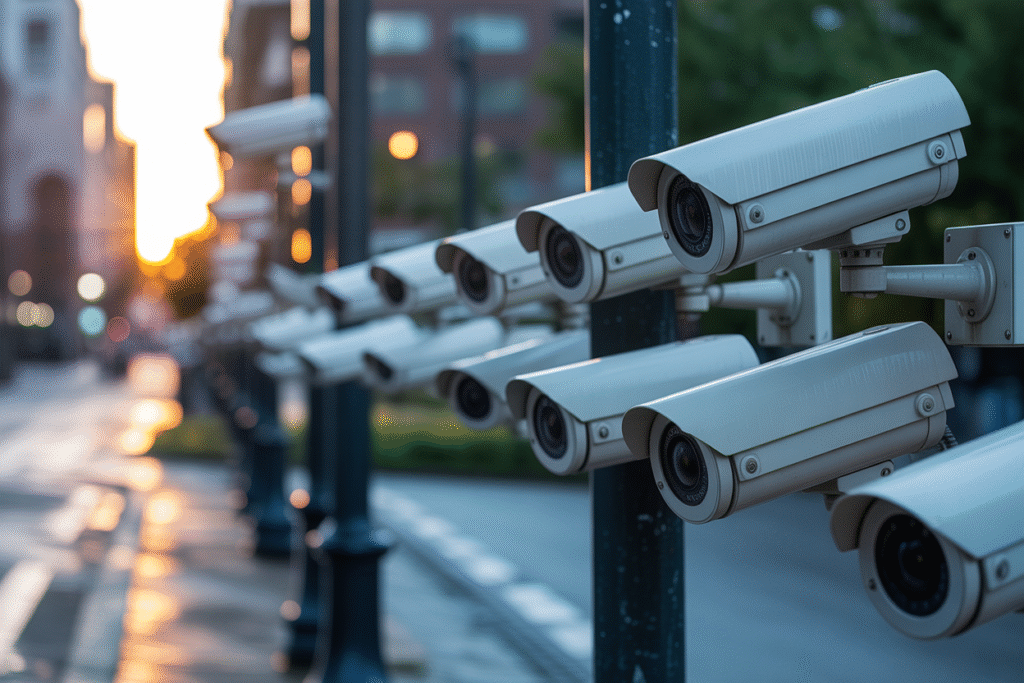

User @VoxExVeritas uploaded a 45-second clip stitched from traffic-cam footage and ominous captions. The message? America has become an Israeli-grade AI surveillance state.

In plain language, the video alleges that algorithms, likely built on Palantir-style platforms, now scan public spaces in real time. They don’t just watch; they predict. Jaywalking, loitering, even facial micro-expressions can supposedly trigger a “pre-crime” flag.

The author’s tone is raw frustration, not polished punditry. They blame ignorance more than malice, arguing that citizens traded liberty for the illusion of safety. The kicker: a warning that social-credit scores and central bank digital currencies (CBDCs) are the next dominoes.

Engagement shot up because the clip feels urgent. No corporate branding, no press release, just a screen recording and a timestamp. That apparent authenticity cuts through the usual AI hype.

Why Privacy Hawks and Police Chiefs Are Both Watching

Supporters of predictive policing say the tech saves lives. Imagine an algorithm that flags a school-shooter mindset days before a weapon is purchased. Law-enforcement stakeholders argue that early intervention beats body bags.

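Critics counter with data. The ACLU points to studies showing that predictive models inherit human bias. If historical arrests skew toward minority neighborhoods, the model sends more patrols there, those patrols log more arrests, and the new data appears to confirm the original skew. The AI doubles down on over-policing those areas.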
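To see why a skewed history can compound rather than wash out, here is a minimal toy simulation. Every number and rule in it is a hypothetical assumption, not any vendor’s method: two neighborhoods with an identical true offense rate, arrests recorded only where patrols go, and a “hot-spot” rule (the invented `hot_share` parameter) that sends 80% of patrols to whichever area has the most recorded arrests.

```python
def simulate_hotspot_policing(recorded, true_rate, patrols_per_day=100,
                              days=30, hot_share=0.8):
    """Toy feedback loop: patrols follow past arrests, and new arrests
    are only recorded where patrols actually go (all values hypothetical)."""
    recorded = list(recorded)
    n = len(recorded)
    for _ in range(days):
        # The area with the most recorded arrests becomes today's "hot spot".
        hot = max(range(n), key=lambda i: recorded[i])
        for i in range(n):
            share = hot_share if i == hot else (1 - hot_share) / (n - 1)
            # Recorded arrests scale with patrol presence, not just with crime.
            recorded[i] += patrols_per_day * share * true_rate[i]
    return recorded


before = [60, 40]  # historical arrests: area 0 starts slightly ahead
after = simulate_hotspot_policing(before, true_rate=[0.1, 0.1])
print(f"area 0 share of arrests: {before[0] / sum(before):.0%} -> "
      f"{after[0] / sum(after):.0%}")
```

Both areas offend at the same rate by construction, yet area 0’s share of recorded arrests climbs from 60% to 75% in a simulated month, because the system mostly sees crime where it already looks.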

Then there’s the economic angle. Every camera that replaces a beat cop shifts budget lines. Unions worry about job displacement; city councils salivate at potential savings.

Here’s a question that splits rooms faster than any political slogan: if an algorithm predicts you’ll commit fraud in 2026, should banks freeze your accounts today?

From Sci-Fi to City Hall—The Tech Behind the Curtain

Palantir Gotham and similar platforms ingest everything from DMV photos to utility-bill patterns. Machine-learning models hunt anomalies, then spit out risk scores.

Here’s how it works in three steps:

1. Data fusion: Cameras, license-plate readers, and social-media scrapers feed a central data lake.

2. Behavior baselines: The system learns what “normal” looks like for every city block.

3. Alert triggers: Deviations—say, a backpack left unattended—get weighted by context and history.
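The three steps can be sketched in a few lines of Python, under loudly stated assumptions. The feeds are reduced to hypothetical `(block, hour, count)` tuples, “normal” is simply the mean and standard deviation of hourly activity per block, and `context_weight` is an invented per-block multiplier. This illustrates the general idea only; it is not based on Palantir Gotham or any other product.

```python
from collections import defaultdict
from statistics import mean, pstdev


def fuse(*feeds):
    """Step 1 (data fusion): merge (block, hour, count) records from all feeds."""
    lake = defaultdict(lambda: defaultdict(int))
    for feed in feeds:
        for block, hour, count in feed:
            lake[block][hour] += count
    return lake


def learn_baselines(lake):
    """Step 2 (behavior baselines): mean and spread of hourly activity per block."""
    baselines = {}
    for block, hours in lake.items():
        counts = list(hours.values())
        baselines[block] = (mean(counts), pstdev(counts))
    return baselines


def trigger_alerts(current, baselines, context_weight, threshold=3.0, min_sigma=1.0):
    """Step 3 (alert triggers): z-score deviation, scaled by a context weight."""
    flagged = []
    for block, count in sorted(current.items()):
        mu, sigma = baselines.get(block, (0.0, 0.0))
        # The floor on sigma avoids dividing by zero on perfectly steady blocks.
        z = (count - mu) / max(sigma, min_sigma)
        score = z * context_weight.get(block, 1.0)
        if score >= threshold:
            flagged.append((block, round(score, 2)))
    return flagged


# Hypothetical history: six hours of camera and plate-reader counts.
cameras = [("A", h, 10) for h in range(6)] + [("B", h, 5) for h in range(6)]
plate_readers = [("A", h, h % 2) for h in range(6)]
base = learn_baselines(fuse(cameras, plate_readers))
print(trigger_alerts({"A": 11, "B": 8}, base, context_weight={"A": 1.5}))
```

Here block B is flagged for a jump from 5 to 8 events, while block A passes despite its higher context weight. Note the design trade-off in `min_sigma`: it prevents a divide-by-zero, but it also means a modest change on a quiet block scores as a three-sigma event, with no notion of whether anything suspicious actually happened.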

Sounds efficient, right? The catch is opacity. Even engineers struggle to explain why one face scores higher than another. That black-box nature fuels both investor excitement and civil-liberties panic.

Imagine your daily jog suddenly labeled suspicious because you loop the same park three mornings in a row. Scale that across millions of citizens and the margin for error becomes a civil-rights crisis.
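The scale problem is a base-rate effect, and a few lines of arithmetic make it concrete. The figures below are purely hypothetical: 1,000,000 people scanned, 100 of whom are actual offenders, and a system that catches 99% of offenders while wrongly flagging 1% of everyone else.

```python
def screening_outcome(population, offenders, sensitivity, false_positive_rate):
    """Return (true flags, false flags, share of flags that are correct)."""
    innocents = population - offenders
    true_flags = offenders * sensitivity
    false_flags = innocents * false_positive_rate
    precision = true_flags / (true_flags + false_flags)
    return true_flags, false_flags, precision


hits, false_alarms, precision = screening_outcome(1_000_000, 100, 0.99, 0.01)
print(f"{hits:,.0f} real offenders flagged, {false_alarms:,.0f} innocent people flagged")
print(f"only {precision:.2%} of flags point at an actual offender")
# prints:
# 99 real offenders flagged, 9,999 innocent people flagged
# only 0.98% of flags point at an actual offender
```

In other words, a system that sounds “99% accurate” would be wrong about roughly 99 of every 100 people it flags.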

What Happens Next—And How to Push Back

Regulation is coming, but it’s a patchwork. Some cities ban facial recognition outright; others hand blank checks to vendors. The federal playbook remains a stack of unread white papers.

Citizens have three immediate levers:

– Demand algorithmic audits. If the math affects lives, the public deserves transparency reports.

– Support local bans. Grassroots campaigns in San Francisco and Boston, both of which barred city agencies from using facial recognition, show that city councils listen when voters shout.

– Practice digital hygiene. Simple steps—opting out of data brokers, using encrypted messaging—reduce the raw material these systems feed on.
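What might an algorithmic audit actually check? One basic test is whether flag rates differ across neighborhoods or demographic groups. The sketch below assumes a hypothetical audit log of `(group, was_flagged)` pairs; the group names and counts are invented for illustration, and a real audit would also examine error rates, input data, and downstream outcomes.

```python
from collections import Counter


def flag_rates(records):
    """Share of people flagged per group, from (group, was_flagged) pairs."""
    totals, flagged = Counter(), Counter()
    for group, was_flagged in records:
        totals[group] += 1
        flagged[group] += int(was_flagged)
    return {group: flagged[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Highest group flag rate divided by the lowest; 1.0 means parity."""
    low = min(rates.values())
    return float("inf") if low == 0 else max(rates.values()) / low


# Hypothetical audit log: 100 residents sampled from each of two areas.
log = ([("north", True)] * 3 + [("north", False)] * 97
       + [("south", True)] * 9 + [("south", False)] * 91)
rates = flag_rates(log)
print(rates, f"disparity ratio: {disparity_ratio(rates):.1f}")
```

A ratio of 3.0 means residents of one area are flagged three times as often as residents of the other. That alone does not prove bias, but it is exactly the kind of number a transparency report should surface.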

Final thought: Every dystopia starts as a convenience. Seat belts became mandatory because the alternative was carnage. AI surveillance could follow the same arc, from convenience to regulation, but only if we demand guardrails before the cameras learn to whisper.

Speak up at your next city-hall meeting. The algorithm is already listening.