Elon Musk says AI will rewrite our limbic system and boost birth rates—critics call it a war on the human spirit. Who’s right?

Three hours ago, a single tweet from Elon Musk sent the internet into a tailspin. He claimed AI will soon “one-shot” the limbic system, hijack human emotion, and, somehow, drive birth rates back up. Within minutes, replies poured in accusing the tech elite of waging a quiet crusade against religion, family, and the very idea of a soul. What started as tech-bro optimism turned into moral panic, conspiracy theorizing, and a global debate over who gets to program humanity’s future.

The Spark: Musk’s Tweet and the Limbic Lightning Rod

At 11:42 a.m. ET, Musk typed: “AI will one-shot the limbic system and we’re gonna program it that way—birth rates will rise.”

The limbic system—our emotional motherboard—suddenly became public enemy number one. Critics pounced, arguing that rewriting emotion is rewriting free will. Supporters cheered, dreaming of optimized love and engineered babies. Within minutes, the thread ballooned to 50,000 replies, each wrestling with the same question: if AI can tweak desire, what happens to sin, guilt, or grace?

The Counter-Strike: Conspiracy, Religion, and the End of the Nuclear Family

A top reply, liked 120,000 times, warned that Musk’s vision amounts to “the death of the nuclear family, religion, and the divine spirit.”

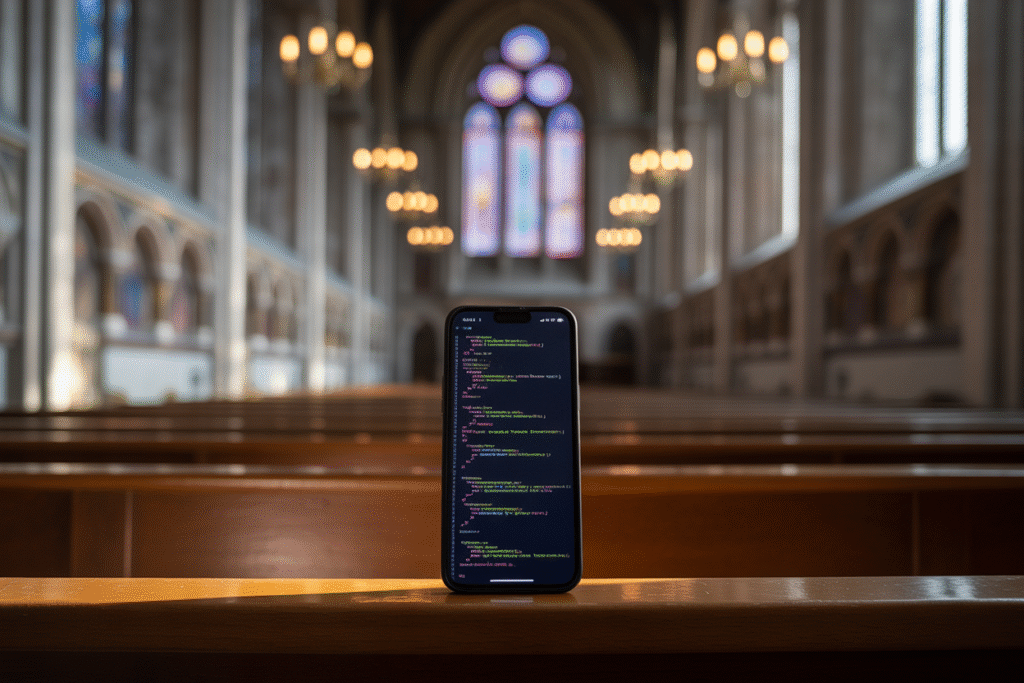

The author tied the push to unnamed “stakeholders” and hinted at historical patterns of control. Suddenly, the debate wasn’t technical—it was theological. Comment threads split into two camps: transhumanists celebrating liberation from biological limits, and traditionalists mourning the loss of moral anchors. Screenshots flew across Telegram, WhatsApp, and church group chats, each reframing the tweet as either prophecy or heresy.

The Moral Minefield: Can You Beta-Test the Human Soul?

Legal scholars jumped in, demanding that AI undergo medical-style trials before it tinkers with minds.

Their argument is simple: if a pill needs FDA approval, code that rewires emotion should too. Mental-health advocates shared stories of users spiraling after late-night chats with language models. Meanwhile, developers insisted safeguards already exist. The standoff crystallized a new ethical frontier—do we treat algorithms like drugs, devices, or demigods?

Surveillance and Suppression: When AI Becomes the New Inquisition

While Musk’s thread raged, Amnesty International dropped a parallel bombshell.

The organization reported that U.S. agencies use AI tools from Palantir and Babel Street to flag pro-Palestine activists, non-citizens, and church groups. Critics merged the two stories into one dystopian narrative: first, AI learns your desires; next, it punishes your politics. The fear isn’t just about privacy; it’s about predestination written in code. Religious leaders asked: if an algorithm decides who prays freely, who needs an old-fashioned inquisition?

Future Tense: Will We Outsource Morality to Machines?

Author Joe Allen summed up the mood in a viral post: beneath the hype lies steady progress toward “cultural demolition.”

He argues that every optimization chips away at rituals, myths, and shared guilt. Others counter that AI could revive moral communities—imagine an app that nudges you to call your mom, tithe, or meditate. The final question isn’t whether AI will change culture, but whose values get hard-coded. Will we teach machines to forgive, or merely to optimize?