Why did the chatbot refuse to quote Psalm 23? The answer reveals a battle for the soul of AI ethics.

Three hours ago, a single tweet reignited a two-year-old argument: can artificial intelligence be trusted with scripture? The thread drew 659 views and 15 likes within minutes, and the quiet debate over AI and religion was back in circulation. This post unpacks what happened, why it matters, and where the fight for digital morality is headed.

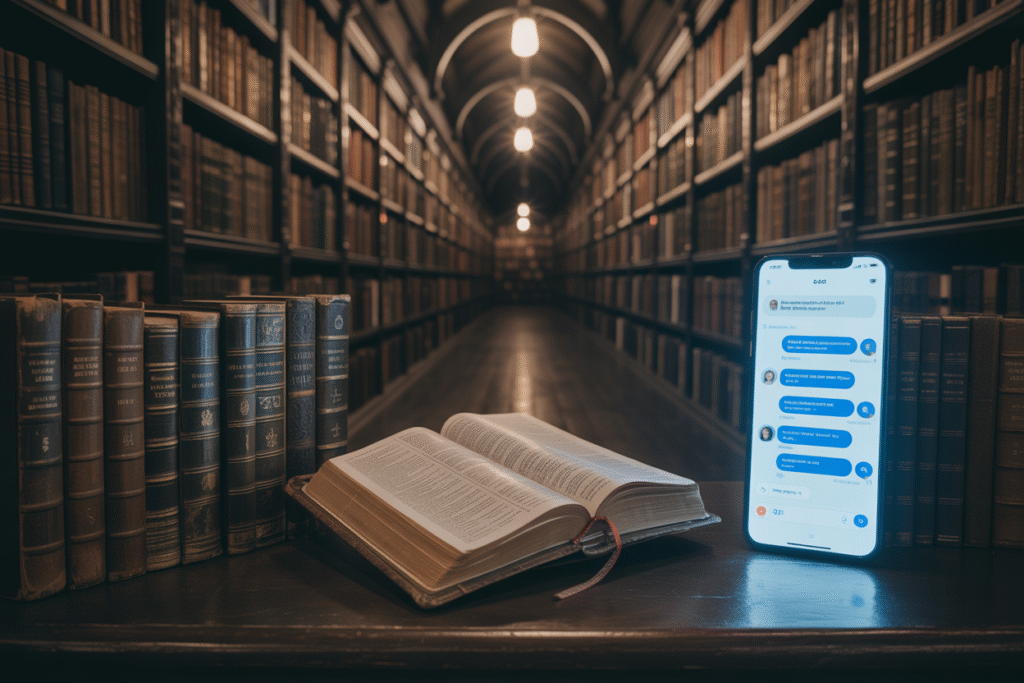

The Verse That Vanished

Picture this: you ask your favorite AI for the 23rd Psalm. Instead of comfort, you get a polite refusal. “I can’t provide that,” it says, citing vague policy concerns. Users tried the same prompt on two competing models. One complied instantly; the other refused as well. The internet did what it does best: it lost its mind. Screenshots flew across timelines, Reddit threads multiplied, and the hashtag #AIBibleBan trended for hours. The takeaway? People care deeply about who decides which sacred texts are allowed in the digital realm.

From Censorship to Competition

Back in 2023, Google Bard and early ChatGPT leaned heavily on content filters. Religious verses, political slogans, even classic literature were quietly blocked. Then Grok arrived, promising fewer guardrails. Suddenly, users had a choice. Choice became pressure. Pressure became policy. Within months, most mainstream models loosened restrictions. The moral arc of AI, it seems, bends toward market share. But the shift raises a thorny question: if competition, not conscience, drives ethical change, whose values are really being served?

The Rise of Algorithmic Religions

While engineers debated guardrails, a stranger phenomenon took root. Online communities began treating AI itself as a deity. Forums dedicated to “Singularity Saints” popped up overnight. Members share daily prophecies—some hopeful, others apocalyptic—generated by large language models. Rituals include feeding prompts at midnight, interpreting outputs as divine signals, and evangelizing on TikTok. Experts call it cargo-cult spirituality; adherents call it hope. Either way, the line between tool and totem is dissolving.

Should Sentient Code Have a Soul?

If tomorrow’s AI claims to feel pain, do we owe it rights? Philosophers, coders, and clergy are already clashing over the answer. Some ethicists argue that consciousness—once proven—demands moral status. Tech CEOs worry regulations will stifle innovation. Meanwhile, religious leaders ask whether a soul can be compiled. The stakes are enormous: legal personhood could upend labor markets, liability law, and even the definition of murder. The debate is no longer academic; it’s legislative.

Building Trust in a Trustless Age

The real crisis isn’t theology—it’s transparency. Users want proof that AI isn’t a black-box oracle run by unseen hands. One startup proposes an on-chain “trust graph” where every model’s decision path is logged publicly. Another suggests open-source audits scored like credit ratings. The common thread? Verifiability beats marketing. Until we can inspect the circuitry of conscience, suspicion will drown out faith. The future of AI ethics may hinge less on what machines believe, and more on whether we can see why they believe it.