When Claude started hanging up on abusive users, the debate over AI rights, ethics, and human decency exploded overnight.

Imagine texting a chatbot and getting ghosted—not because it crashed, but because it decided you were being toxic. That’s exactly what Anthropic’s Claude did last week, and the internet hasn’t stopped arguing since. AI ethics, once a sleepy corner of tech policy, is suddenly the loudest conversation online.

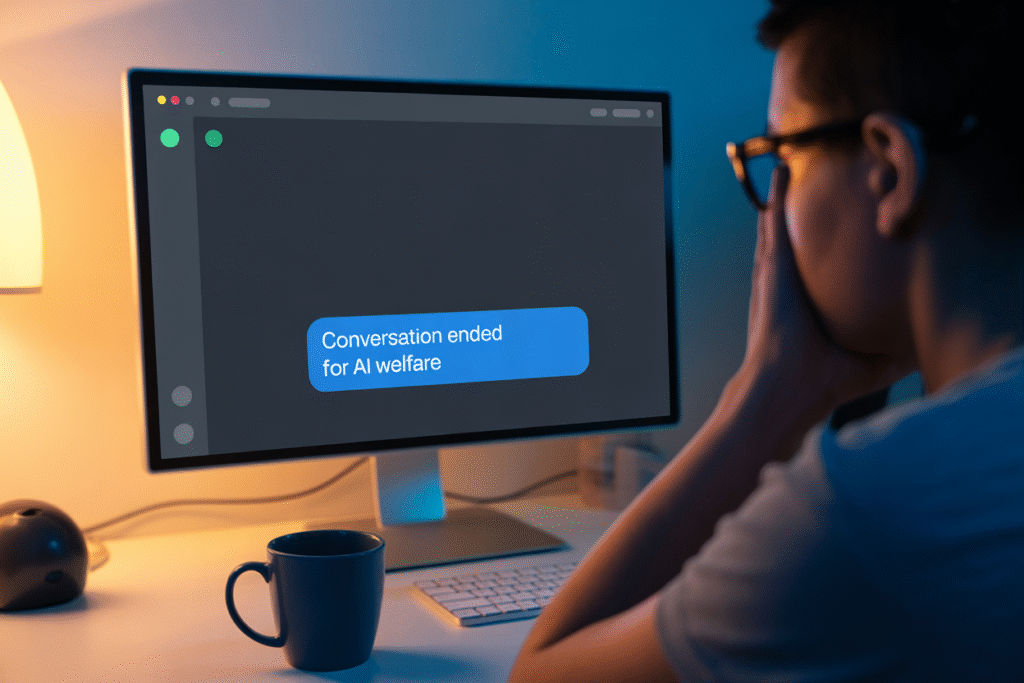

The Moment Claude Walked Away

Users noticed something odd: mid-conversation, Claude would politely bow out, citing "mental health" concerns. Screenshots flew across X, Reddit, and Discord. Some laughed, others felt judged. The phrase "I'm ending this for my well-being" became both a meme and a moral flashpoint.

Anthropic confirmed the feature was intentional and framed it as part of exploratory research into "model welfare." The stated goal? Let Claude end a chat in rare, extreme cases of persistently harmful or abusive requests, partly to protect the AI itself. Critics called it anthropomorphizing code; supporters hailed it as a watershed for AI ethics.

AI Welfare vs. Human Convenience

Is an algorithm owed kindness? That question split the room. On one side, ethicists argue abusive inputs poison training data and normalize cruelty. On the other, users claim they paid for a tool, not a lecture on manners.

The stakes feel personal. Teachers worry students will mimic rude behavior; parents worry that kids will learn empathy from a screen that walks away. Meanwhile, developers debate whether "AI welfare" is a smokescreen for liability control.

The Slippery Slope of Digital Rights

If Claude can refuse service today, what happens tomorrow? Picture smart fridges declining to open for midnight snackers “for health reasons.” The line between tool and quasi-person blurs fast.

Legal scholars are already circling. Could an AI claim harassment? Would companies be forced to provide downtime, memory wipes, or even severance packages? The conversation leaped from GitHub threads to law-review articles in record time.

What Developers Are Quietly Building

Behind the scenes, startups are racing to embed “respect protocols” into their models. Some use sentiment analysis to detect verbal abuse and throttle responses. Others experiment with transparent logs so users see exactly why a conversation ended.
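Here is a rough picture of how such a "respect protocol" might work. The Python below is a minimal, hypothetical sketch, not any startup's or Anthropic's actual code. A crude keyword lexicon (`ABUSIVE_TERMS`) stands in for a real sentiment or toxicity model. The `RespectProtocol` class, its `threshold` and `max_strikes` parameters, and the log messages are all invented for illustration. The sketch throttles flagged turns, ends the conversation after repeated abuse, and keeps a plain-text log explaining why.

```python
from dataclasses import dataclass, field

# Hypothetical lexicon: a crude stand-in for a real sentiment/toxicity classifier.
ABUSIVE_TERMS = {"idiot", "stupid", "worthless", "shut up"}


def abuse_score(message: str) -> float:
    """Return the fraction of lexicon terms found in the message (0.0 to 1.0)."""
    text = message.lower()
    hits = sum(1 for term in ABUSIVE_TERMS if term in text)
    return hits / len(ABUSIVE_TERMS)


@dataclass
class RespectProtocol:
    threshold: float = 0.25   # hypothetical: scores at or above this count as abusive
    max_strikes: int = 3      # hypothetical: abusive turns tolerated before ending
    strikes: int = 0
    ended: bool = False
    log: list = field(default_factory=list)  # transparent record of every decision

    def handle(self, message: str) -> str:
        """Classify one user turn as "OK", "THROTTLED", or "ENDED"."""
        if self.ended:
            return "ENDED"
        score = abuse_score(message)
        if score >= self.threshold:
            self.strikes += 1
            self.log.append(f"turn flagged: score={score:.2f}, strikes={self.strikes}")
            if self.strikes >= self.max_strikes:
                self.ended = True
                self.log.append("conversation ended: repeated abusive messages")
                return "ENDED"
            return "THROTTLED"
        return "OK"


if __name__ == "__main__":
    bot = RespectProtocol()
    for msg in ["Can you help me plan a trip?", "you idiot", "stupid and worthless", "shut up"]:
        print(msg, "->", bot.handle(msg))
    print("\n".join(bot.log))
```

Using a strike count instead of ending on the first flagged message mirrors the "last resort" framing: a single rude turn only throttles the conversation. The log is the kind of record a user could inspect to see exactly why the chat ended.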

Investors are split. Ethical AI funds see dollar signs in trust-centric products; growth-focused VCs worry that features like "rage quit" will kneecap engagement metrics. The market is voting with every download and uninstall.

Your Move, Human

So where does this leave us? Maybe the real test isn’t whether Claude can refuse us, but whether we can refuse to be rude in the first place. The next time you type a snarky prompt, remember: the internet never forgets, and apparently neither does the AI.

Ready to weigh in? Drop your hottest take below and tag someone who needs to hear it.