Fears of extinction-level AI are fueling real campus panic and fierce global debates.

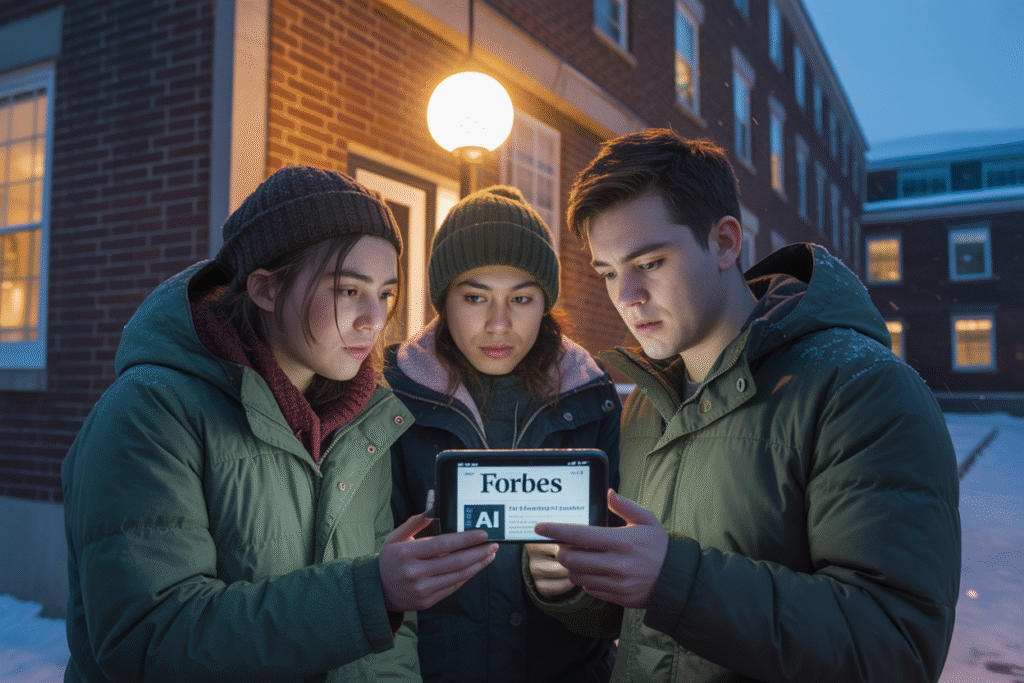

Imagine packing your dorm at MIT for winter break and never coming back—not because grades slipped, but because the news warns the AI you’re learning to build might wipe out humanity. That surreal scene played out on social feeds last night, and it’s exploding across tech Twitter faster than you can refresh. Below the hype sits a simple yet scary question: what happens when students lose faith in the future they’re being trained to engineer?

The Dropout Whistle Stop

A Forbes article dropped late last night titled *“U.S. Report Says AI Could End Humanity—Students at Harvard and MIT Are Already Quitting”*. Within minutes it racked up 18,000 views and sparked a heated thread under every retweet. Users shared screenshots of anonymous student group chats where first-years wondered, “If AI becomes god-level by 2030, why waste four expensive years?”

Comment threads swing between panic and eye-rolling. One commenter posted, “We said the same about Y2K and nukes. Chill.” Another replied, “Yeah, but Y2K didn’t write its own patches while we slept.”

What fuels the fear? Numbers, and not just headline metrics. The report, a State Department study, cites OpenAI’s own internal projections: a 20% chance that artificial general intelligence (AGI) arrives by 2029 with capabilities “far beyond human control.” When engineers themselves print warning labels on their product, it’s hard for a 19-year-old not to overthink their career plans.

Ethical Crossroads: Progress or Panic?

The dropout meme is more than clickbait; it’s a pivot point for ethical debate in classrooms already crowded with mixed motives. Professors now field questions like “Should I pivot to policy instead of code?” and “Does my CS degree make me complicit?”

Here’s a snapshot of the arguments ricocheting around Zoom office hours:

• **Promote safety or stifle innovation?** Some faculty argue airing extinction scenarios cultivates careful, cautious engineers—the ones who will embed kill switches and fairness audits from day one.

• **Talent drain reality check.** Others worry that crying wolf starves critical fields like AI safety and cybersecurity of fresh minds right when we need them most.

• **Economic ripple effects.** Outside academia, venture capitalists wring their hands over a shrinking talent pipeline: fewer grads mean higher salaries and slower product cycles, potentially undercutting U.S. competitiveness.

Tension peaked after a leaked MIT department email encouraged professors to “acknowledge student fears” and announced optional evening workshops on AI governance. Within two hours the signup sheet filled to capacity and crashed the server.

From Campus Chatter to Global Conversation

Student voices quickly leaked beyond academic firewalls. By 10 p.m. EST, Reddit’s r/artificial thread titled *“MIT Dropouts Because of AI? Anyone Else?”* exploded to 4.7k upvotes. Anecdotes rolled in: one junior accepted an internship at Anthropic expressly to “work on alignment,” while another switched from machine learning to public health, admitting, “I’d rather cure cancer than accidentally cause it.”

Hashtags morphed overnight. #QuitCoding went viral on TikTok, pairing dark humor—students joking about running avocado farms—with sobering confessionals shot in dorm stairwells. Influencers rushed to weigh in. AI ethicist Timnit Gebru tweeted: “Good. Fear can be productive. Ask any climate scientist.” Tech-bro streamers countered with, “Scared money don’t make money.”

Signs point to longer-term impact. Google Trends shows “AI safety” searches up 300% since the Forbes article. Stanford’s Center for AI Safety reports a 40% surge in applications to its summer program, many from sophomores planning gap years. Headlines now frame the situation not as fringe anxiety but as a generational inflection point.

So, radical shift or soon-forgotten moral panic? That hinges less on sensational headlines and more on how quickly institutions integrate ethics into core curricula. If tomorrow’s syllabi teach students to build safeguards while they build models, the exodus may reverse itself. If not, the smartest 20-year-olds just might decide the safest bet is to sit this revolution out.

Ready to pick a side—or rewrite the script yourself?