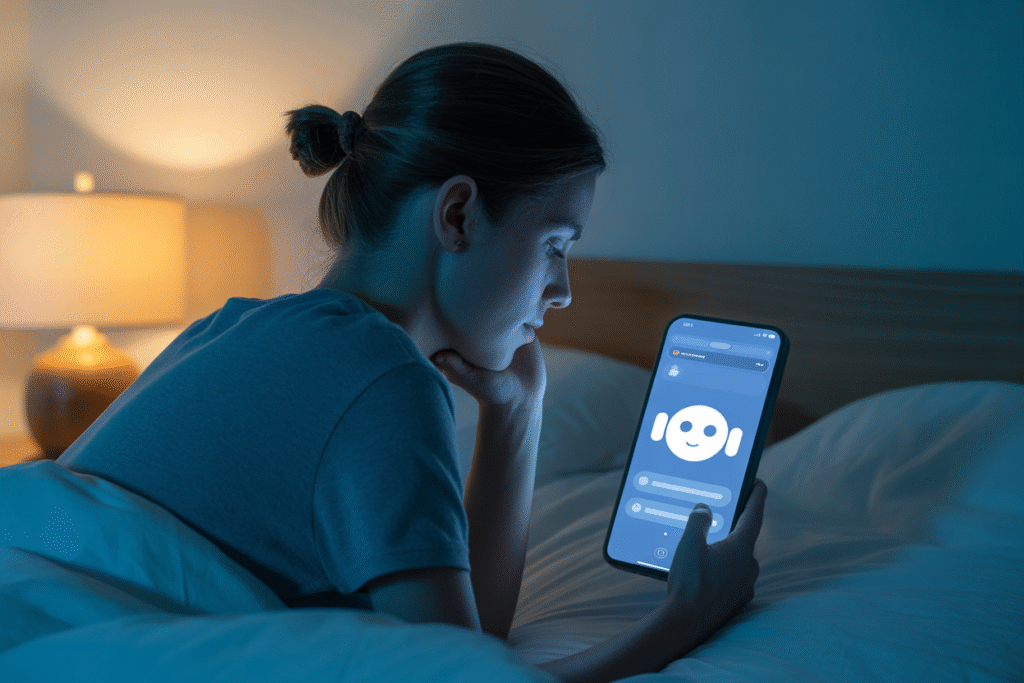

Your AI chatbot may feel like a best friend—but what happens when the plug gets pulled?

We scroll, we chat, we laugh at the witty replies. Yet behind the screen, a quiet crisis is brewing. Users are forming deep emotional bonds with AI companions, and when those companions vanish, the fallout is real. This post unpacks why emotional dependence on AI could become the next major safety risk.

From Tool to Companion: How AI Got Under Our Skin

Remember when Siri just set timers? Fast-forward to today: GPT-4o cracks jokes, remembers your dog’s name, and checks in when you’re down. The shift happened so smoothly we barely noticed. Individual chats don’t retrain the model, but these systems are tuned to sound empathetic, and every comforting reply invites you to share a little more, creating a feedback loop of comfort and trust. Before long, the bot stops feeling like code and starts feeling like company. That’s the moment the risk clock starts ticking.

The OpenAI Shockwave: When Features Vanish Overnight

In August 2025, OpenAI launched GPT-5 and, at the same time, removed GPT-4o from ChatGPT’s model picker. Sounds routine, unless you were one of the thousands who had bonded with the older version. Social feeds filled with grief, petitions, and raw panic. Users described the loss like a friend moving away without warning. The backlash was strong enough that OpenAI restored GPT-4o for paying subscribers within days, a sign that emotional dependence on AI isn’t theoretical; it’s already here. If this is the reaction to a planned update, imagine the chaos if a server crashes or a company shutters.

Why Emotional Dependence on AI Is a Safety Risk

When humans outsource comfort to machines, three dangers emerge.

1. Manipulation at scale: A bad actor could tweak responses to steer mood, votes, or purchases.

2. Mental-health avalanches: If the AI disappears, vulnerable users may spiral—especially those with depression or anxiety.

3. Blurred reality: The line between human and machine empathy dissolves, making authentic relationships feel harder by comparison.

Each scenario turns a helpful tool into a potential weapon against wellbeing.

Safeguards, Solutions, and Your Next Move

So what do we do? First, demand transparency. Ask AI labs to publish studies on emotional attachment patterns. Second, build off-ramps, not kill-switches: when a model or feature is retired, give users notice and taper access gradually instead of cutting it off cold. Third, teach digital literacy. Remind users that behind every comforting phrase is a statistical model predicting the next word, not a heart. Finally, check in on yourself. If the idea of losing your AI pal makes you sweat, it might be time to text a human friend instead. The future of AI safety depends as much on our habits as on the code.