Israel’s GIDEON AI is scanning social media for “threat language” in real time—raising urgent questions about privacy, religion, and the ethics of pre-crime policing.

Just hours after the Minnesota school shooting, a former IDF operative stepped onto prime-time television and quietly unveiled GIDEON—an AI engine already scraping every tweet, prayer, and meme for signs of future violence. Within minutes, timelines lit up with two words: surveillance state. This post unpacks why GIDEON AI surveillance is suddenly the most talked-about—and feared—technology in religion, morality, and civil rights circles.

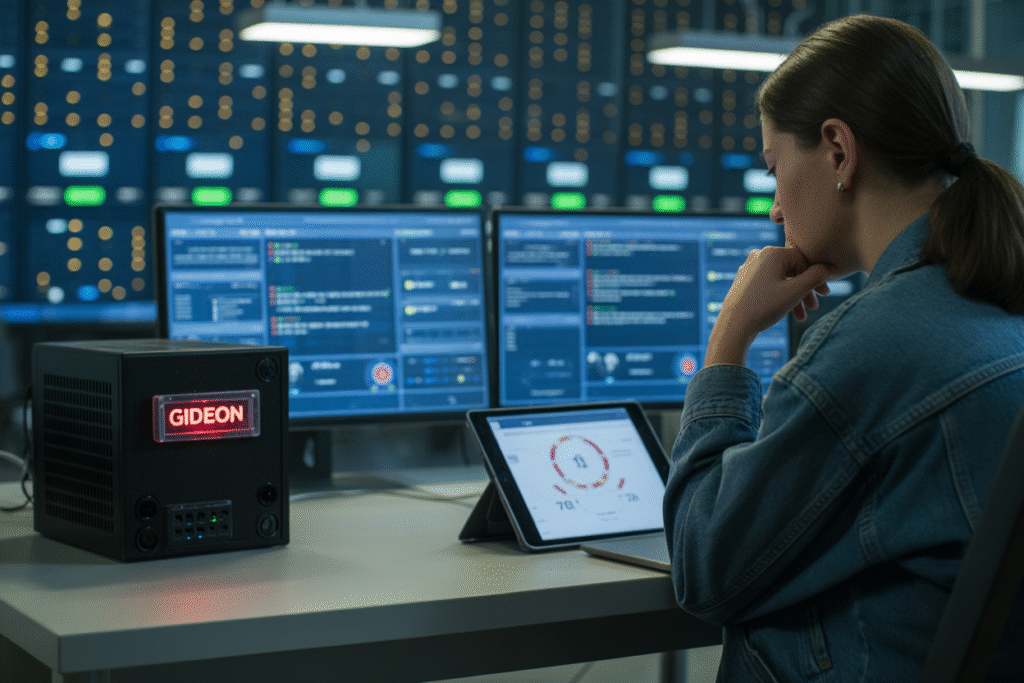

The 60-Second Origin Story

Aaron Cohen, ex-IDF and current Fox News analyst, held up a black smartphone and called it “Israel-grade ontology.”

Behind him, a graphic spelled out GIDEON in block letters. In plain English, the system ingests every public post, forum thread, and encrypted chat it can reach, then flags phrases like “martyrdom,” “jihad,” or even “I’m done.”

Cohen claimed it could have stopped the Minnesota tragedy. Critics heard something else: pre-crime policing on American soil.

How GIDEON AI Surveillance Actually Works

Think of GIDEON as a 24/7 digital beat cop with a photographic memory.

It never sleeps, forgets, or asks for a warrant. Instead, it uses natural-language models trained on millions of posts in Hebrew, Arabic, English, and Spanish.

Here’s the three-step flow:

1. Scrape: Pulls text from Twitter, Telegram, Discord, TikTok comments, and niche religious forums.

2. Score: Assigns a risk score from 0 to 100 based on keyword density, sentiment, and context.

3. Route: Sends high-score alerts to fusion centers, school resource officers, or—in pilot programs—local clergy.
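To make the Score and Route steps concrete, here is a deliberately crude Python sketch. It is not GIDEON's code, which has not been made public. The `WATCHLIST` weights, the `ALERT_THRESHOLD` cutoff, and the function names are all hypothetical. It models keyword hits only and leaves out the sentiment and context signals described above.

```python
import re

# Hypothetical watchlist: the three phrases are the examples quoted in this
# post; the weights are invented for illustration.
WATCHLIST = {"martyrdom": 40, "jihad": 40, "i'm done": 20}

# Assumed cutoff for raising an alert, not a documented GIDEON value.
ALERT_THRESHOLD = 40


def normalize(text):
    """Lowercase the text and convert curly apostrophes to straight ones."""
    return text.lower().replace("\u2019", "'")


def risk_score(text):
    """Return a 0-100 score from whole-phrase watchlist hits only."""
    cleaned = normalize(text)
    score = 0
    for phrase, weight in WATCHLIST.items():
        pattern = r"\b" + re.escape(phrase) + r"\b"
        score += len(re.findall(pattern, cleaned)) * weight
    return min(score, 100)  # cap at the top of the 0-100 scale


def route(text):
    """Return 'alert' for posts at or above the threshold, else 'ignore'."""
    return "alert" if risk_score(text) >= ALERT_THRESHOLD else "ignore"


sermon_note = "Our study of martyrdom in the early church continues Sunday."
print(risk_score(sermon_note), route(sermon_note))
```

Under these made-up weights, the church announcement scores 40 and is routed as an alert, while "Finals week is over. I'm done." scores 20 and is ignored. A scorer that counts words without understanding them cannot tell a Bible study from a threat, and that is the false-positive problem critics keep pointing to.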

The kicker? Palantir provides the cloud backbone, meaning your data hops from your phone to an Israeli server to a U.S. police dashboard in under five seconds.

The Moral Minefield

Is it ethical to arrest, or even flag, someone over violence they have not committed yet?

Religious leaders are split. Some see a moral imperative: if AI can save even one child, we must use it. Others hear echoes of Minority Report and worry about criminalizing prayer language.

Picture a youth pastor posting Revelation commentary that mentions “martyrs.” GIDEON could flag it as extremist content.

Now imagine that pastor visited by armed officers during Sunday service. The chilling effect on free speech—and free worship—is immediate.

Voices From the Front Lines

Glenn Greenwald devoted an entire livestream to the topic, calling the rollout “textbook shock doctrine.”

Melania Trump issued a statement urging “pre-emptive intervention” in homes and schools, amplifying the sense of inevitability.

Meanwhile, on X, user @NavigatingTheLies posted a thread claiming GIDEON is “training data to suppress biblical Christianity.” The thread gained 27,000 views in two hours.

Each voice adds urgency: Are we trading liberty for an algorithmic promise of safety?

What Happens Next—and How to Push Back

Congress is already drafting bills to regulate AI surveillance, but lobbyists from Palantir and defense contractors are circling.

If you’re a faith leader, consider hosting a town hall on digital ethics. If you’re a parent, ask your school board whether it is piloting GIDEON.

Want to stay informed? Follow watchdog groups like the Electronic Frontier Foundation and sign up for alerts on AI surveillance legislation.

Speak up now—before the algorithm speaks for you.