Thousands of student AI alerts every week—but half are jokes and most aren’t threats—so who’s really being watched?

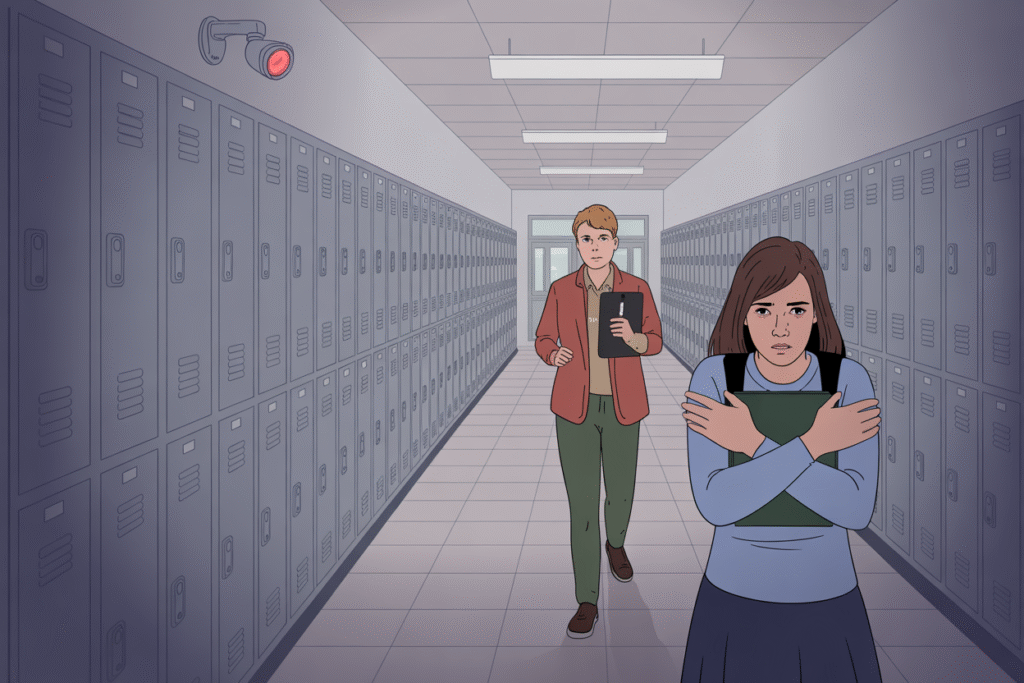

An eighth-grader cracks a joke on Snapchat and ends up in handcuffs. Teachers cheer the 24/7 system that flagged him. Welcome to the newest classroom controversy: AI surveillance in schools. In the last three hours alone, the debate has spilled from closed-door meetings into trending threads on X. Below, we break down what happened, why people are furious, and five burning questions nobody is answering.

The Canary That Screamed Wolf: One Kid’s Story

Last week in Knoxville, a 13-year-old named Malik shared a GIF of exploding fireworks captioned “I’m lit” on Snapchat.

Within fourteen minutes, Gaggle, one of the AI scanners many districts now lease, flagged the post for the phrase “I’m lit.” A school resource officer escorted the boy out of geometry class, questioned him without his parents present, and held him overnight in juvenile detention. The fireworks? Homecoming party. The aftermath? Charges dropped, parents furious, district insisting the AI is “working as designed.”

Multiply Malik by the 100,000 alerts pouring into U.S. schools every single week, and you glimpse why “school AI surveillance risks” just rocketed up Google Trends.

AI’s Report Card Gets a Big Red F

AP News just dropped its investigation into platforms like GoGuardian, Bark, and Lightspeed Alert—software now monitoring 4 million American K-12 students.

The data is blunt:

• 1.8 billion items scanned every day.

• 70% of alerts are slang or inside jokes mislabeled as suicidal or threatening.

• Black students are disproportionately flagged—by margins that rival existing racial discipline gaps.

• Four in ten teachers admit they “ignore the red flag half the time” because the system cries wolf.

Meanwhile, the vendors trumpet saves: a suicidal teen’s intercepted message, a would-be shooter stopped. But critics ask: if a metal detector went off every time someone wore a belt, would we still call that success?

The Quiet Shift: When Laptops Become Snitches

Remember privacy stickers over webcams? Districts flipped the lens back on us.

AI tools sit at the OS level and read everything: Google Docs drafts, classroom chat in Canvas, private Notes apps. They even scrape audio from mic-enabled remote learning sessions. Because the tech is sold as “student safety,” districts often treat use of a district device as blanket consent under FERPA, leaving parents shocked when screenshots surface in suspension hearings.

One Florida teen learned his weekend Minecraft server banter about the new “Fortnite drop” had been screen-captured and filed as a “weapons threat.” He now jokes that his laptop is the “Narcs in Mac.” Funny line, until you realize the banter earned him a permanent disciplinary note on his transcript.

Ethics on the Chalkboard: Three Clashing Views

Listen to the hallway conversations and you’ll spot three camps using the same two letters, AI, as if they spelled entirely different words.

Pro-Safety Teachers:

• Claim a single prevented tragedy outweighs a thousand false positives.

• Push for expanded mood-tracking cameras and biometric scans.

• Fear liability lawsuits if they miss the next red flag.

Digital Rights Students:

• Start memes comparing classroom software to Stasi tactics.

• Organize walkouts on #HandsOffMyDrive days.

• Argue mental health declines under constant algorithmic judgment.

Overwhelmed Admins:

• Sit trapped between vocal parents on both sides, with zero state guidelines to lean on.

• Quietly delete old disciplinary flags when lawyers threaten subpoenas.

• Fundraise for “ethics audits” they privately suspect will never come.

The irony? Everyone insists they care about kids, yet the loudest voices seem to be the profit charts on the vendor pitch decks.

Will Your District Be Next? Smart Questions to Ask Tonight

Change starts with a single parent at a Monday board meeting asking better questions than “Is this safe?”

Try these instead:

1. Who trains the algorithm on our kids’ data, and when was the last bias audit?

2. Can I opt my child out without stripping them of required devices?

3. Where are the false-positive numbers published, and what’s the threshold for removal?

4. Does the district keep audio or biometric data, and for how long?

5. What recourse exists when an innocent student gets branded by a glitch?

Pop those questions into the superintendent’s inbox—polite, curious, relentless—because the contract renewals are signed at 6 p.m. Thursday and public comment closes twenty-four hours before. You may not stop the cameras, but you can stop the silence.

Speak up, share this link with three parents who haven’t seen the scan, and let’s push the AI debate from viral outrage to actual policy.