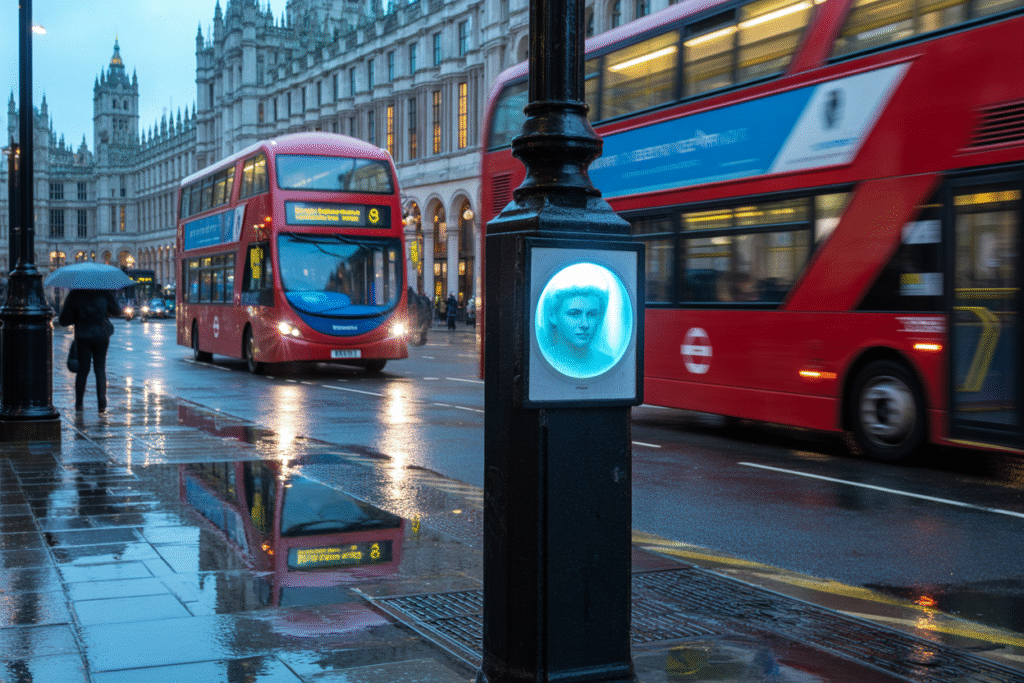

Eight wrongful arrests, one chilling boast, and a city under algorithmic watch—London’s latest AI ethics scandal unpacked.

Imagine strolling through Covent Garden, latte in hand, when a discreet camera flags you as a wanted burglar. Eight Londoners lived that nightmare this year, and the Met’s response was to call the error rate “low.” In the next five minutes you’ll learn why eight isn’t a comfort number, how AI surveillance ethics are being rewritten on our streets, and what you can do before the cameras turn your way.

The Boast That Broke the Internet

On a grey Friday morning Big Brother Watch dropped a 45-second clip that detonated across X. A senior Met officer, clipboard in hand, casually tells reporters, “Only eight misidentifications in 2025.”

Only eight. The phrase ricocheted through group chats, legal Slack channels, and late-night talk shows. Civil-rights lawyer Maya Patel summed up the mood in three words: “Only eight lives.”

The video ends with a freeze-frame of the officer’s half-smile, captioned in bold red: “Eight too many.” Within three hours the post hit 21,000 likes and 3,400 reposts, a sign that AI ethics is no longer niche but front-page news.

Eight Faces, Eight Stories

Behind the statistic are real humans. There’s Amina, a 27-year-old teacher who missed her own engagement party after being detained outside Waterloo Station. And there’s David, a 62-year-old jazz musician who spent six hours in a holding cell because the algorithm matched his bearded face to a robbery suspect.

Each wrongful arrest triggers a domino effect: lost wages, legal fees, reputational damage. The Met currently offers no automatic compensation, leaving victims to sue for damages that can take years to materialise.

Psychologists note a new term cropping up in therapy rooms: “algorithmic trauma,” the unique stress of being judged by an invisible, unaccountable machine.

How the Tech Actually Works (and Fails)

Live facial recognition (LFR) cameras scan every face in range, convert features into numerical vectors, then compare those vectors against watchlists. Sounds sci-fi, yet the devil lives in the training data.
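To make the matching step concrete, here is a minimal sketch of comparing one face vector against a watchlist. It is an illustration only, not the Met's system: the three-number vectors, the names `suspect_a` and `suspect_b`, the cosine-similarity measure, and the 0.9 threshold are all assumptions chosen for the example. Real systems use vectors with hundreds of dimensions produced by a trained model.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two vectors: 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_watchlist_match(probe, watchlist, threshold=0.9):
    """Return (name, score) for the closest watchlist entry,
    or None if even the closest one scores below the threshold."""
    best_name, best_score = None, -1.0
    for name, vector in watchlist.items():
        score = cosine_similarity(probe, vector)
        if score > best_score:
            best_name, best_score = name, score
    if best_score >= threshold:
        return best_name, best_score
    return None


# Hypothetical toy vectors, invented for this example.
WATCHLIST = {
    "suspect_a": [0.9, 0.1, 0.3],
    "suspect_b": [0.1, 0.8, 0.5],
}

# A passer-by who is NOT on the watchlist but happens to look similar.
passer_by = [0.85, 0.2, 0.35]

print(best_watchlist_match(passer_by, WATCHLIST))                   # flagged as suspect_a
print(best_watchlist_match(passer_by, WATCHLIST, threshold=0.995))  # None
```

In this toy case the passer-by is flagged at the looser threshold and ignored at the stricter one. That is the trade-off in miniature: a lower threshold catches more genuine suspects but also stops more innocent people.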

Most datasets skew white, male, and under forty. Darker skin tones return higher false-match rates, up to 34% in some US studies. In a city as diverse as London, that bias lands on far more people.
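A false-match rate is simple to compute once outcomes are logged: of the people scanned who were not on the watchlist, what share were flagged anyway? The sketch below breaks that figure down by group, which is roughly what a bias audit would do. The group labels and counts are invented toy numbers, not results from any real deployment or study.

```python
from collections import defaultdict


def false_match_rate_by_group(trials):
    """trials: iterable of (group, was_flagged) pairs, covering only
    people who were NOT on the watchlist. Returns {group: rate}."""
    flagged = defaultdict(int)
    total = defaultdict(int)
    for group, was_flagged in trials:
        total[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {group: flagged[group] / total[group] for group in total}


# Hypothetical toy data: 100 innocent passers-by per group.
TOY_TRIALS = (
    [("group_x", False)] * 98 + [("group_x", True)] * 2
    + [("group_y", False)] * 95 + [("group_y", True)] * 5
)

rates = false_match_rate_by_group(TOY_TRIALS)
for group, rate in sorted(rates.items()):
    print(f"{group}: {rate:.1%} of innocent passers-by flagged")
```

A single headline accuracy figure can hide exactly this kind of gap, which is why a per-group breakdown matters more than the overall average.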

Weather messes with accuracy too. Low winter sun, rain-slick hoods, and face masks all cut into it. The Met admits these variables but insists the system is “operationally sound.” Critics reply that “sound” shouldn’t mean eight innocent people in cuffs.

The Legal Vacuum

The UK currently has no primary legislation specifically governing police use of facial recognition. Instead, forces rely on a patchwork of common-law powers and the Data Protection Act 2018.

Three pending judicial reviews could change everything. One case argues LFR breaches Article 8 of the European Convention on Human Rights, the right to respect for private life, which the Human Rights Act 1998 makes enforceable in UK courts. Another contends it violates the Public Sector Equality Duty by disproportionately targeting Black Londoners.

Meanwhile MPs are debating the long-delayed Surveillance Camera Code. If passed, it would mandate bias audits, public impact assessments, and compensation schemes. Until then, the cameras roll on.

What Happens Next—and How to Push Back

Short term: expect more deployments. The Met has budgeted £3 million for additional LFR vans through 2026. Long term: the tech could expand to retail hubs, stadiums, and schools.

You’re not powerless. Start local: file Freedom of Information requests asking your council if LFR is planned for your high street. Support organisations like Liberty and Big Brother Watch—they’re crowd-funding test-case litigation.

On social media, tag your MP every time a new misidentification surfaces. Public pressure works; after sustained backlash, the Met paused a 2024 rollout in Stratford.

Want to go deeper? Download the free Facial Recognition Impact Toolkit from the Ada Lovelace Institute. It walks citizens through spotting deployments, documenting incidents, and submitting evidence to regulators.

The cameras are watching. Make sure we’re watching back.