A fresh NATO-Palantir pact is igniting global outrage over military AI, its ethics, and its surveillance risks.

Picture this: it’s 2025, and NATO just handed the keys to its next-gen defense brain to Palantir, the data-mining giant chaired by Peter Thiel. Within hours, activists, ethicists, and Reddit threads exploded. Is this the dawn of safer battlefields—or the quiet birth of algorithmic warfare? Let’s unpack the firestorm.

The Deal That Dropped Like a Bomb

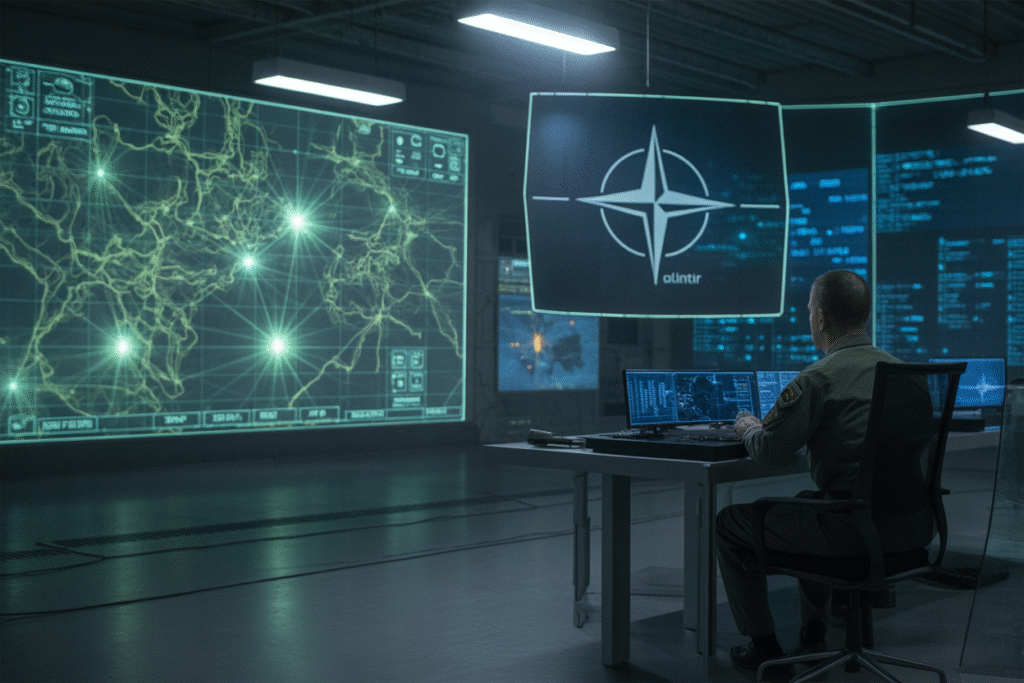

Yesterday, NATO quietly signed a multi-year contract with Palantir to deploy AI decision-support systems across allied command centers. The goal? Faster threat detection, sharper logistics, and real-time battlefield analytics.

Palantir’s stock ticked up, but the internet lost its mind. Why? Because the same company that helped ICE track migrants is now set to advise commanders on strikes and troop movements. Activists call it a moral red line; generals call it overdue modernization.

Reddit’s r/realtech thread on the story hit 64 upvotes in three hours—small numbers, massive sentiment. One user wrote, “We’re letting Thiel’s code decide who lives or dies.” Another replied, “Better AI than human error.” The debate is raw, and it’s only getting louder.

From ICE Raids to Artillery Ranges

Palantir’s tech isn’t new to controversy. Its Gotham platform has powered immigration raids, predictive policing, and corporate surveillance for years. Now, the same data-fusion engine will ingest satellite feeds, drone footage, and social-media chatter to advise NATO commanders.

Critics fear mission creep. If Palantir can flag an undocumented family in Chicago, what stops it from flagging a village in Eastern Europe as a “high-risk cluster”? The datasets are different, but the logic—pattern recognition at scale—remains identical.

Supporters argue that military AI can reduce civilian casualties by sharpening targeting precision. Yet history shows that better tools rarely stay in their ethical lanes. Most people know GPS as a navigation aid; it also guides loitering munitions.

Ethics on the Battlefield: Who Pulls the Trigger?

Autonomous weapons grab headlines, but decision-support systems are the silent revolution. These AI aides crunch variables—weather, troop morale, collateral estimates—in seconds, then recommend strike packages or withdrawal routes.

Sounds efficient, right? The catch is moral deskilling. When officers grow accustomed to algorithmic advice, human judgment atrophies. A 2024 ICRC study warns that rapid AI prompts can create pressure to act faster, sidelining the pause that ethical judgment needs.

Imagine a commander staring at a screen that flashes 92% probability of enemy presence, 3% civilian risk. Does she override the green light? Does she even know how the 3% was calculated? The chain of accountability blurs, and that’s where ethics in military AI gets messy.

Job Displacement and the New War Room

Every algorithmic upgrade triggers a human downgrade. Analysts who once parsed drone footage for eight hours straight now watch AI highlight boxes in real time. The upside: fewer burned-out eyes. The downside: fewer jobs.

NATO won’t publish layoff numbers, but defense contractors already advertise “AI-augmented operations” as a selling point to cut personnel costs. Meanwhile, displaced analysts retrain as “AI supervisors,” babysitting models they barely understand.

The ripple effect hits civilian tech workers too. If Palantir perfects battlefield AI, expect police departments and border agencies to demand the same efficiencies. The line between military and domestic surveillance keeps fading.

Regulation or Runaway Tech?

International law hasn’t kept pace. The Geneva Conventions predate the microchip, and the current debate on autonomous weapons centers on “meaningful human control,” a phrase so vague it could cover anything from a joystick to a rubber stamp.

Some states want a binding treaty banning fully autonomous lethal systems. Others, led by the U.S. and Russia, insist existing international humanitarian law is enough. Caught in the middle are alliances like NATO that outsource innovation to private firms.

Public pressure may tip the scales. The Reddit thread is already spawning memes, op-eds, and NGO campaigns. If the outrage is sustained, lawmakers could impose procurement freezes or transparency mandates. If not, Palantir’s code becomes the new normal.

So, what can you do? Start by following the debate, asking hard questions, and demanding that your representatives treat military AI as a public issue, not a classified footnote. The battlefield of tomorrow is being coded today, and silence is a choice.