Inside the race to deploy autonomous weapons, the promise of faster, cheaper war collides with the nightmare of algorithmic slaughter.

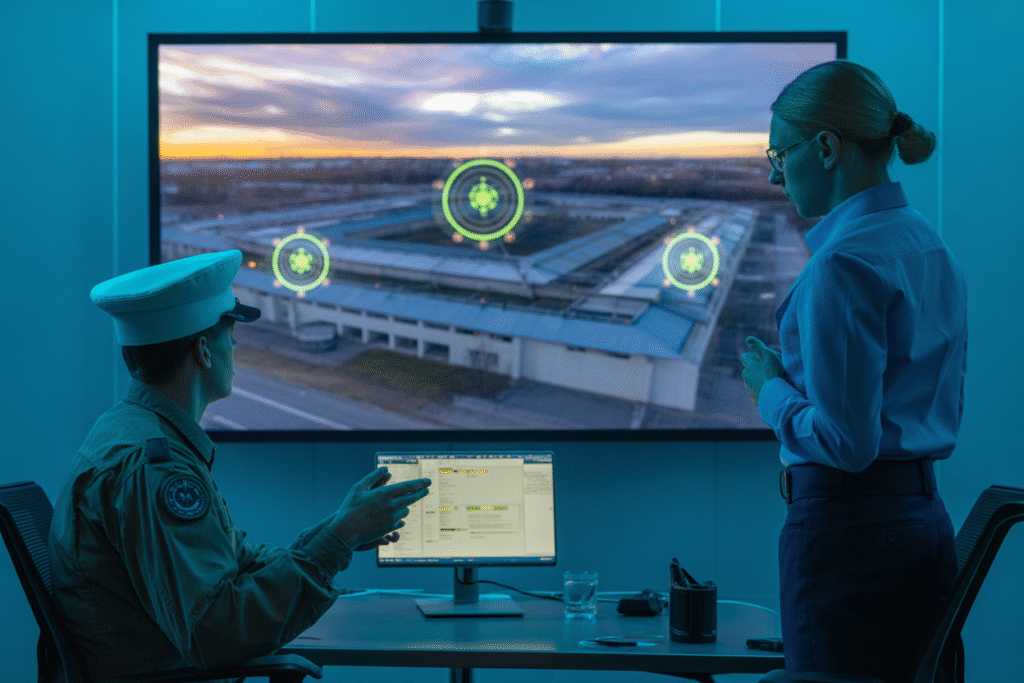

The Pentagon wants thousands of AI drones in the sky by 2026. Israel’s “Lavender” system already picks bombing targets in Gaza. Venture capital is pouring billions into startups promising to make killing more efficient. Is this the future of defense—or the end of human accountability?

The Replicator Rush

Picture a factory floor where drones roll off the line faster than smartphones. That’s the vision behind Replicator, the Pentagon’s flagship program, announced in August 2023, to field swarms of AI-enabled systems within 18 to 24 months. Officials say the goal is to out-think and out-maneuver China in any Pacific conflict.

Speed is the selling point. Traditional weapons take years to design, test, and buy. Replicator aims to cut that cycle to months by leaning on Silicon Valley’s “move fast and break things” playbook. If a drone fails, iterate and ship again.

Yet speed has a cost. Less testing means more unknowns. When software—not a pilot—decides when to fire, a single coding error can turn a crowded marketplace into a graveyard. How many civilian lives equal one faster deployment cycle? The Pentagon hasn’t published that equation.

Gaza’s Algorithmic Battlefield

While Replicator gears up, Israel’s military has, by the account of intelligence sources quoted by +972 Magazine and Local Call, already handed much of its targeting to machines. The system nicknamed “Lavender” reportedly sifts through surveillance data, including phone records, social media, and facial recognition, to flag alleged militants for airstrikes.

Human officers once spent days cross-checking intelligence. Lavender does it in seconds. The result? A dramatic spike in authorized targets—and in civilian deaths. Over 33,000 Palestinians have been killed since October 2023, according to Gaza health officials. Many strikes hit apartment blocks, schools, and hospitals.

Critics call it death by spreadsheet. Supporters call it operational necessity. Either way, the moral buffer between soldier and decision has thinned to a line of code. When an algorithm mislabels a teenager as a combatant, who pulls the trigger? Who carries the guilt? The answers are murkier than the smoke rising over Gaza.

Silicon Valley’s War Chest

Defense tech used to be the domain of giants like Lockheed and Raytheon. Today, venture firms such as Andreessen Horowitz write checks to twenty-something founders who promise to “democratize” military AI. The pitch decks are slick: reduce costs, save American lives, deter adversaries.

Investors see a trillion-dollar market. Startups see a green light to experiment with lethal autonomy. One firm advertises drones that “learn” enemy patterns in real time. Another offers predictive analytics that rank villages by insurgent probability.

The money flows quietly. Most contracts are classified, shielded from public debate. Meanwhile, engineers who once built photo-sharing apps now tweak neural networks to distinguish a farmer’s shovel from a rocket launcher at 10,000 feet. Is this the career they dreamed of in college? Some say yes; others leave, haunted by late-night Slack messages about collateral-damage estimates.

The Accountability Gap

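International law is less clear than it sounds. The laws of war require attackers to distinguish combatants from civilians and to keep strikes proportionate, and Pentagon policy calls for “appropriate levels of human judgment” over the use of force, but no treaty says a human must remain “in the loop” for lethal decisions. Reality is messier still. When an AI flags a target and a stressed officer has thirty seconds to approve, how meaningful is that loop?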

Military lawyers argue that existing rules suffice. Human-rights groups disagree. They warn of a slippery slope toward fully autonomous weapons—machines that choose, aim, and fire without any human veto. The Campaign to Stop Killer Robots has pushed for a global ban since 2013. Progress has been glacial.

Congress is waking up. A bipartisan bill would require Pentagon AI systems to pass an annual ethics audit. Critics call it toothless, noting that the Defense Department already conducts internal reviews of its own. Still, the symbolism matters. For the first time, lawmakers are asking whether national security can coexist with algorithmic accountability.

What Happens Next?

Imagine a 2027 skirmish in the South China Sea. Swarms of Replicator drones buzz overhead, each running code updated the night before. A Chinese vessel broadcasts a spoofed signal; an American drone interprets it as hostile and launches a missile. Minutes later, retaliation strikes a U.S. base. No human ordered the first shot.

Scenarios like this keep ethicists awake. They also fuel a quieter debate inside the Pentagon: should the U.S. declare a moratorium on fully autonomous weapons, even if rivals refuse? Some generals say restraint is weakness. Others argue that the first country to trust machines with nuclear-scale decisions may trigger an arms race no one can control.

The clock is ticking. Every algorithm deployed today sets a precedent for tomorrow’s wars. Citizens, coders, and commanders alike must decide: will we write rules that protect humanity, or code that replaces it? The answer may arrive faster than we think.