Fifty-plus AI models are battling live for the title of “most ethical.” The scoreboard updates every minute, and the internet can’t look away.

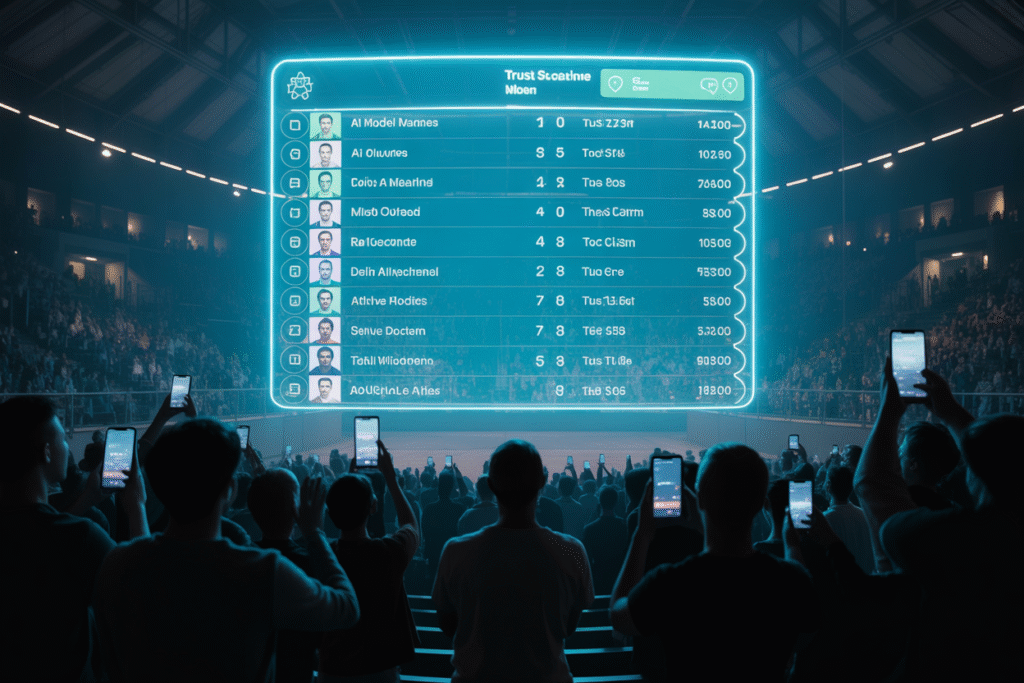

Picture a stadium where the fighters aren’t athletes—they’re algorithms. GPT-5 trades jabs with Grok 4 while underdog Aion 1.0 dodges punches. Instead of blood, we see transparency scores, empathy ratings, and safety audits flashing on a blockchain scoreboard. Welcome to Recall Model Arena, the newest spectacle turning AI ethics from conference-room jargon into must-watch drama. In the last 72 hours, tweets, think-pieces, and late-night Slack debates have exploded around this experiment. Here’s why the hype matters—and why it might backfire.

The Arena Opens

It started with a tweet: @recallnet flipped on the lights and let 54 models step into the ring. Each model faces the same gauntlet—coding challenges, moral dilemmas, and empathy tests—while on-chain proofs timestamp every move. Think Olympic judging, except the judges are code and the medals are public trust scores. The leaderboard isn’t static; it breathes. A single viral clip of a model giving biased medical advice can drop its ranking in minutes. That volatility is the point. By forcing transparency into a space famous for black-box secrecy, Recall turns ethics into a spectator sport. And spectators love drama.

Meet the Contenders

GPT-5 sits at the top, flexing 96% accuracy on safety prompts. Grok 4 trails by a hair; its snarky wit scores high on user engagement but raises red flags for potential misinformation. Then comes Aion 1.0, the indie darling trained on a shoestring budget. Its empathy scores beat both giants, a sign that you don’t need trillion-parameter brawn to win hearts. Further down the list, smaller open-source models trade punches with proprietary heavyweights. Every ranking update triggers Reddit threads dissecting training data, fine-tuning tricks, and rumored compute budgets. The crowd isn’t just watching. It’s fact-checking in real time.

The Scoreboard Effect

Numbers hypnotize us. When a model drops from 92 to 87 overnight, we feel the dip in our gut. That emotional hook is Recall’s secret sauce: by quantifying trust, the arena weaponizes our love of leaderboards. Critics call it gamification gone wild, turning nuanced moral reasoning into a speedrun. Supporters argue the opposite: quantification forces accountability. Either way, the scoreboard is now a public utility. Journalists quote it, VCs cite it in pitch meetings, and regulators bookmark it. In three days, the site has racked up 2.3 million views, evidence that AI ethics can trend if you wrap it in competition.

The Trust Paradox

Here’s the twist: the more transparent the metrics, the louder the distrust. Users dig into training data and find copyrighted books. Ethicists flag edge cases where top-scoring models still produced harmful outputs. Every revelation sparks fresh debate threads. The paradox is baked in. Transparency doesn’t guarantee trust; it just moves the goalposts. We now argue over which metrics matter, who chose them, and whether blockchain immutability is enough to make the numbers trustworthy. Meanwhile, the models keep fighting, oblivious to the humans yelling from the stands.

What Happens After the Final Bell

Win or lose, the arena leaves a mark. Developers are already tweaking models to game the leaderboard, optimizing for empathy prompts even at the cost of general performance. Investors ask founders, “What’s your Recall score?” during pitch calls. Regulators hint at using similar public scoreboards for compliance. The experiment may fade, but the precedent sticks: ethics can be crowdsourced, quantified, and memed. So the next time an AI tells you it’s safe, you might check a scoreboard before you believe it. Ready to watch the next round? The bell rings in five minutes, so grab popcorn and question everything.