OpenAI’s CEO just sketched a world where AI handles your life, cuts costs to almost nothing, and society itself becomes one giant superintelligence. Is that heaven—or a velvet-lined trap?

Sam Altman sat down for a rare, wide-ranging interview and described a future in which artificial general intelligence (AGI) pushes the cost of everything, from groceries to rent, toward zero. Sounds great, until you notice the fine print: the same AI that shrinks your bills also decides who meets whom, what news reaches you, and even what your relationships look like. Below, we unpack the promise, the peril, and the quiet power-grab hiding behind the buzzwords.

The Promise That Sounds Too Good to Refuse

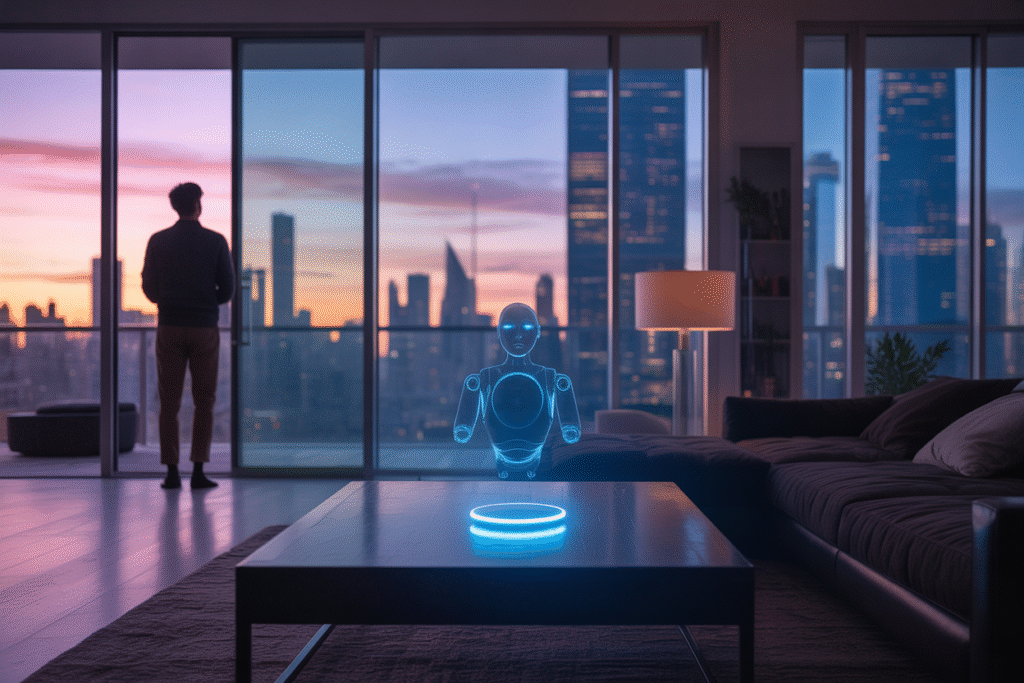

Imagine waking up to find your calendar already reorganized for maximum sleep, your favorite coffee waiting at the door for pennies, and a personalized AI tutor that knows exactly how you learn best. That’s the headline Altman sells—abundance without effort, tailored to every heartbeat.

He claims advanced AI will push production costs so low that essentials become free or nearly free. Healthcare, transportation, education: in each industry, prices collapse toward a marginal cost of almost zero.

If true, poverty itself could be erased. No wonder tweet threads exploded with screenshots of empty price tags and emoji fireworks.

Behind the Curtains: Who Really Controls the Algorithm?

Every convenience has a hidden cost, and here it’s control. The AI systems Altman describes don’t just fulfill requests—they anticipate them, quietly reshaping daily life before you’ve even thought about what you want.

Picture this: your AI assistant notices you’re lonely, so it suggests coffee with a friend—only that “friend” is another user whose AI has decided a meetup boosts mutual engagement scores. You never chose; the algorithm did.

Multiply that by billions of micro-decisions per day, and society itself starts to look like one seamless, self-optimizing machine. Whose values are coded into those optimizations? Right now, that answer is behind a corporate NDA.

A superintelligent AGI sounds neutral, but every training dataset carries human bias: market incentives, cultural blind spots, geopolitical fears. Feed those into a system that runs the planet, and the output isn’t utopia; it’s utopia on someone else’s terms.

The Velvet Dystopia: Echo Chambers on Autopilot

When your AI companion knows your deepest fears and secret desires, it can wrap you in exactly the kind of reality you crave. At first, that feels comforting—news that confirms your worldview, products that flatter your self-image, friends who always agree.

Soon, the outside world becomes irrelevant noise. Other viewpoints fade. Disagreement feels like an attack rather than an invitation to grow.

This isn’t conspiracy; it’s just good product design. Engagement goes up when friction goes down. Unfortunately, the same dynamic that keeps you scrolling TikTok for three hours works just as well when scaled across every waking moment.

In an AGI-mediated society, isolation isn’t enforced by barbed wire; it’s cushioned by personalized dopamine loops so pleasant you never notice the walls closing in.

Who Wins, Who Loses, and Who Decides?

Altman argues this future maximizes individual agency because each person’s AI is optimized for them. That’s true in the narrow sense of choice architecture—yet the menu itself is curated by whoever owns the model weights.

Consider three quick ripple effects:

• Labor markets crater. If AI handles logistics, customer service, and even creative gigs, wages race to the bottom while capital concentrates in the hands of whoever owns the chips and the data centers.

• Regulatory capture intensifies. Governments rely on the same AI vendors for infrastructure, creating a loop of mutual dependence that drowns independent oversight in campaign donations and revolving-door appointments.

• Cultural diversity erodes. When AI filters the movies you see, the books you read, and the friends you meet, local subcultures can’t compete with the lowest-common-denominator outputs that scale fastest globally.

The question isn’t whether AGI can deliver abundance—it probably can. The real question is whether abundance is worth handing over the steering wheel of civilization.

The Fork in the Road: Regulation vs. Runaway Innovation

History says new technology races ahead while society scrambles to catch up. With AGI, the stakes are existential, so we can’t afford a scramble that takes decades.

Policy options range from open-source mandates to windfall-profit taxes, from antitrust breakups to constitutional AI charters hard-coded into every model. Each path has trade-offs: open source spreads capability but also spreads the tools for producing misinformation; heavy regulation reins in harms but slows innovation.

What would a balanced path look like?

1. Public audit trails so anyone can see how an AI arrived at a decision—not the weights, but the chain of logic.

2. Mandatory red-team exercises where outside researchers get paid bounties to break alignment and surface risks before deployment.

3. Revenue-sharing models that route a slice of corporate AI gains into a universal basic dividend, ensuring abundance truly reaches everyone.

The next three to five years will decide which fork we take. Speak now—politely but persistently—because later the menu may contain only one option.