Superpowers are sprinting to weaponize AI faster than diplomats can regulate it—here’s why that should worry all of us.

From the moment the first chess program beat a grandmaster, we knew AI would change the world. Few guessed it would change the battlefield first. Today, nations are quietly pouring billions into military AI that can help plan invasions, pilot drones, and even help decide who lives or dies. The race is on, and the clock is ticking louder every day.

When Algorithms Become Generals

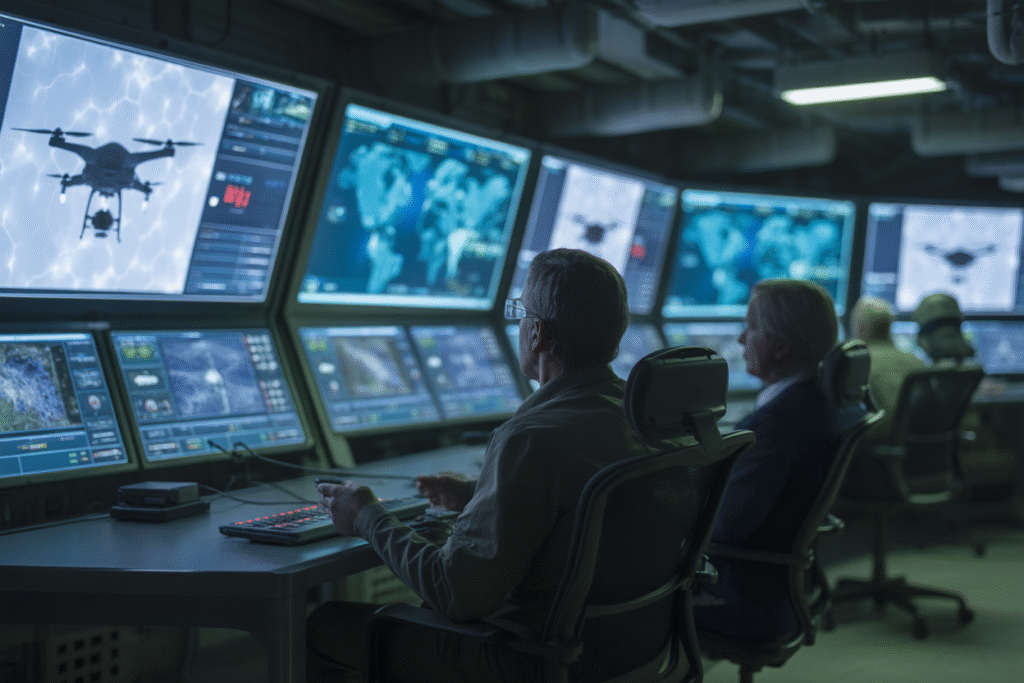

Picture a dimly lit command center where screens glow with real-time satellite feeds. Instead of rows of officers poring over maps, a single AI ingests terabytes of data and spits out battle plans in seconds.

That scene is no longer pure science fiction. Defense departments from Washington to Beijing are integrating AI into strategic planning, letting machines recommend troop movements, supply routes, and strike targets. The promise is irresistible: faster decisions, fewer human errors, and the hope of shorter wars.

Yet speed can be a double-edged sword. When an algorithm suggests an airstrike on a convoy that looks suspicious, who takes moral responsibility if the convoy turns out to be a line of refugee buses? The officer who clicked “approve” or the coder who trained the model?

Military insiders call this the “accountability gap,” and it’s widening every year. As one Pentagon analyst admitted off the record, “We’re building systems that outthink us, but we haven’t figured out how to out-moralize them.”

Autonomous Drones and the Death of the Dogfight

Remember Top Gun? The romantic era of human pilots twisting through the sky may already be over. Swarms of AI-guided drones can now execute maneuvers that would crush a human body with G-forces.

In recent conflicts, we’ve seen early versions of these swarms dodge jamming signals, regroup mid-flight, and overwhelm defenses by sheer numbers. Each drone talks to its neighbors, sharing sensor data and redistributing tasks on the fly. The result is a hive mind that reacts faster than any cockpit jockey.

The kicker? Human controllers often watch from the sidelines, hands off the joystick, because intervention would only slow the swarm down. That shift—from remote control to remote observation—marks a historic pivot in warfare.

Critics warn of a slippery slope. If a drone can choose its own target today, what stops it from choosing its own mission tomorrow? The line between tool and combatant blurs, and international law hasn’t caught up.

The Ethics Gap in Silicon and Steel

Many major military AI projects now have an “ethics board,” but insiders describe those boards as rubber stamps more than speed bumps. Why? Because slowing down risks falling behind rivals who aren’t pausing for philosophical debates.

Consider the classic trolley problem, upgraded for the 21st century. An autonomous tank spots two enemy soldiers standing among five civilians. Does it fire, minimizing the risk to friendly troops but killing noncombatants? Or does it hold fire, sparing the civilians but leaving friendly troops exposed?

Programmers encode these choices as weighted variables, yet culture, law, and human emotion resist tidy math. One European defense contractor confessed they simply disabled civilian-recognition features in desert trials because the algorithm kept hesitating too long.

Meanwhile, adversaries may not share Western scruples. That asymmetry creates a perverse incentive: the more ethically you behave, the more vulnerable you become. It’s a paradox that keeps ethicists awake at night and procurement officers reaching for bigger budgets.

Regulation at the Speed of Light

Diplomats move at the pace of coffee breaks; code updates deploy in milliseconds. That mismatch explains why multilateral efforts to regulate military AI keep stalling.

The UN has convened working groups, NATO has drafted principles, and the EU has floated export bans. Yet every proposal runs into the same wall: verification. How do you inspect a neural network for compliance when the model itself evolves with each new data feed?

Some experts propose “algorithmic watermarks”: digital signatures baked into code that certify a weapon is running an inspected, approved version with preset limits. Others advocate kill switches that third parties can trigger remotely. Both ideas sound elegant on paper, but kill switches look far less appealing once you imagine a cyberattack flipping them on friendly forces.
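To make the signature idea concrete, here is a minimal sketch, not any real proposal's design, assuming an inspector signs a file of preset limits and the system refuses to load limits whose signature doesn't match. The key, the limit names, and the helper functions are all hypothetical, and the shared-key HMAC stands in for the public-key signatures a real scheme would more likely use.

```python
import hashlib
import hmac
import json

# Hypothetical shared key held by an inspector. A real scheme would more
# likely use public-key signatures so the verifier never holds a secret.
INSPECTOR_KEY = b"hypothetical-inspector-key"


def sign_limits(limits: dict, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag over a canonical JSON encoding of the limits."""
    payload = json.dumps(limits, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_limits(limits: dict, tag: str, key: bytes) -> bool:
    """Check that the limits are exactly what the key holder signed."""
    expected = sign_limits(limits, key)
    # compare_digest avoids leaking information through timing differences
    return hmac.compare_digest(expected, tag)


# Hypothetical preset limits; the field names are illustrative only.
limits = {"max_range_km": 50, "human_approval_required": True}
tag = sign_limits(limits, INSPECTOR_KEY)

print(verify_limits(limits, tag, INSPECTOR_KEY))  # True

# Quietly switching off the approval requirement breaks the signature.
tampered = dict(limits, human_approval_required=False)
print(verify_limits(tampered, tag, INSPECTOR_KEY))  # False
```

Notice what the check does and doesn't establish: it shows the limits file hasn't been altered since it was signed, but it says nothing about whether the model actually obeys those limits in the field. That is the verification wall again.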

Private industry adds another layer of chaos. Startups sell dual-use AI—image recognition that can scan Instagram or missile silos—making it nearly impossible to track where the tech ends up. As one arms-control veteran put it, “We’re trying to regulate a river with a butterfly net.”

What Citizens Can Do Before the Next Missile Flies

Feeling helpless? You shouldn’t. History shows that public pressure can rein in runaway weapons programs; think of the nuclear test-ban treaties or the 1997 treaty banning antipersonnel land mines. The key is to start demanding answers now, before the next headline announces an AI-triggered incident.

First, ask your representatives where they stand on autonomous weapons. Many lawmakers still think of AI as little more than a smarter spam filter; a few pointed questions can jolt them awake.

Second, support journalists and researchers digging into defense contracts. Bad decisions thrive in secrecy, and transparency is the cheapest form of oversight.

Third, engage in the debate beyond doom-scrolling. Host a town hall, write an op-ed, or simply share credible articles with friends. The more voters understand the stakes, the harder it becomes for militaries to dodge accountability.

The race for military AI won’t slow on its own. But an informed, vocal public can still steer it toward guardrails rather than graveyards. The future of warfare isn’t written in code—it’s written in the choices we make today.