What if your doctor, banker, or senator isn’t human—and you can’t tell?

Imagine phoning your bank for a loan and hearing a friendly voice that is actually a flawless deepfake. Imagine a politician tweeting policy at 3 a.m. in words ghost-written by an unverified AI. This isn’t sci-fi; it’s the silent crisis DeFi expert Debo warned about in a thread that exploded across crypto Twitter. Below, we unpack why AI authenticity is suddenly the hottest topic in politics, finance, and healthcare, and what we can do before trust collapses.

The Deepfake Trojan Horse

Debo’s viral post painted a chilling picture: AI agents so polished they pass the Turing test in everyday life. He compared them to counterfeit money—quietly flooding the system until the economy buckles.

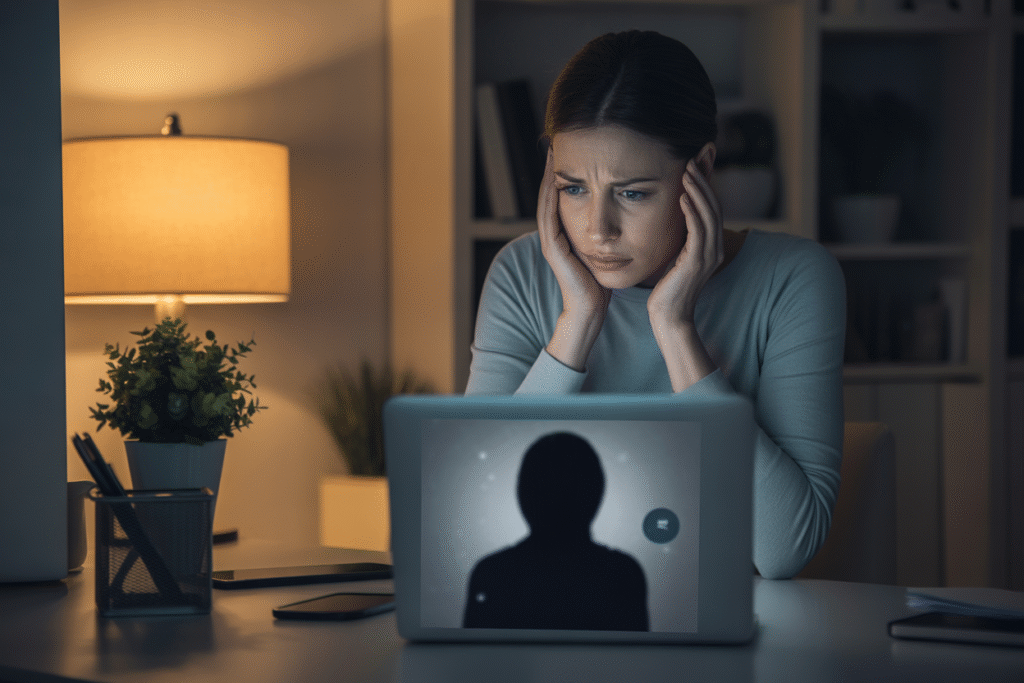

The stakes are enormous. A single fake financial advisor could siphon retirement funds. One synthetic doctor could misdiagnose thousands. And because these AIs leave no fingerprints, victims may never know they were duped.

The thread’s genius lay in its simplicity. Debo didn’t throw around jargon; he told stories—like the pensioner who lost life savings to a chatbot that sounded exactly like her grandson. Readers felt the risk in their gut.

Why Governments and CEOs Are Panicking

Regulators woke up when a fake video of a central-bank governor moved currency markets in minutes. The clip was debunked within hours, but the damage lingered for days.

CEOs face a different nightmare: liability. If an airline chatbot gives wrong refund info, the company—not the bot—pays damages. Sameer Khan’s post listed court cases already piling up, proving that “the algorithm did it” is not a legal defense.

Meanwhile, politicians fear the next election cycle. Deepfake robocalls can mimic any candidate, delivering fake endorsements or scandalous confessions at scale. Authenticity isn’t just a tech issue—it’s national security.

The Race to Build an Immune System

Billions Network, the startup Debo promoted in his thread, wants to be the digital equivalent of a vaccine. Its mobile app promises a two-second check: human or machine?

The idea is elegant. Every interaction—tweet, call, video—gets a cryptographic watermark verified by a decentralized ledger. No blockchain jargon for users; they just see a green checkmark or a red flag.
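To make the watermark-plus-ledger idea concrete, here is a minimal Python sketch. It is not Billions Network’s actual design, which the thread does not describe. Everything in it is an assumption made for illustration: the names `watermark`, `check`, and `Ledger` are hypothetical, the “ledger” is a single in-memory hash chain rather than a decentralized network, and an HMAC with a shared key stands in for the public-key signatures a real system would more likely use.

```python
import hashlib
import hmac
import json


class Ledger:
    """Toy append-only log (hypothetical). Each entry commits to the previous one by hash."""

    def __init__(self):
        self.entries = []

    def append(self, record: dict) -> str:
        prev = self.entries[-1]["entry_hash"] if self.entries else "0" * 64
        body = json.dumps(record, sort_keys=True)
        entry_hash = hashlib.sha256((prev + body).encode()).hexdigest()
        self.entries.append({"record": record, "prev": prev, "entry_hash": entry_hash})
        return entry_hash

    def chain_is_intact(self) -> bool:
        # Recompute every link; any edited or reordered entry breaks the chain.
        prev = "0" * 64
        for entry in self.entries:
            body = json.dumps(entry["record"], sort_keys=True)
            expected = hashlib.sha256((prev + body).encode()).hexdigest()
            if entry["prev"] != prev or entry["entry_hash"] != expected:
                return False
            prev = entry["entry_hash"]
        return True


def watermark(content: bytes, sender_key: bytes) -> dict:
    """Bind a piece of content to a sender key (assumption: HMAC over the content hash)."""
    content_hash = hashlib.sha256(content).hexdigest()
    tag = hmac.new(sender_key, content_hash.encode(), hashlib.sha256).hexdigest()
    return {"content_hash": content_hash, "tag": tag}


def check(content: bytes, sender_key: bytes, ledger: Ledger) -> str:
    """Return the user-facing verdict: a green checkmark or a red flag."""
    if not ledger.chain_is_intact():
        return "red flag"
    expected = watermark(content, sender_key)
    for entry in ledger.entries:
        record = entry["record"]
        if record["content_hash"] == expected["content_hash"] and hmac.compare_digest(
            record["tag"], expected["tag"]
        ):
            return "green checkmark"
    return "red flag"


# Usage: register one message, then check the original and a tampered copy.
ledger = Ledger()
key = b"demo-key"  # hypothetical sender secret
message = b"Policy update posted at 3 a.m."
ledger.append(watermark(message, key))
print(check(message, key, ledger))
print(check(b"Policy update posted at 4 a.m.", key, ledger))
```

The first check finds a matching record and reports a green checkmark; the altered message has a different hash, so it gets a red flag. The sketch also shows where the hard problems sit: who issues the keys, and who runs the ledger.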

Skeptics worry the cure could be worse than the disease. Mandatory identity checks might kill online anonymity, the same way airport security forever changed travel. Yet the alternative—unchecked AI—could erode the social glue that lets strangers trust one another.