A viral theory claims Trump is building AI-powered detention centers—fueling fears of a surveillance state takeover.

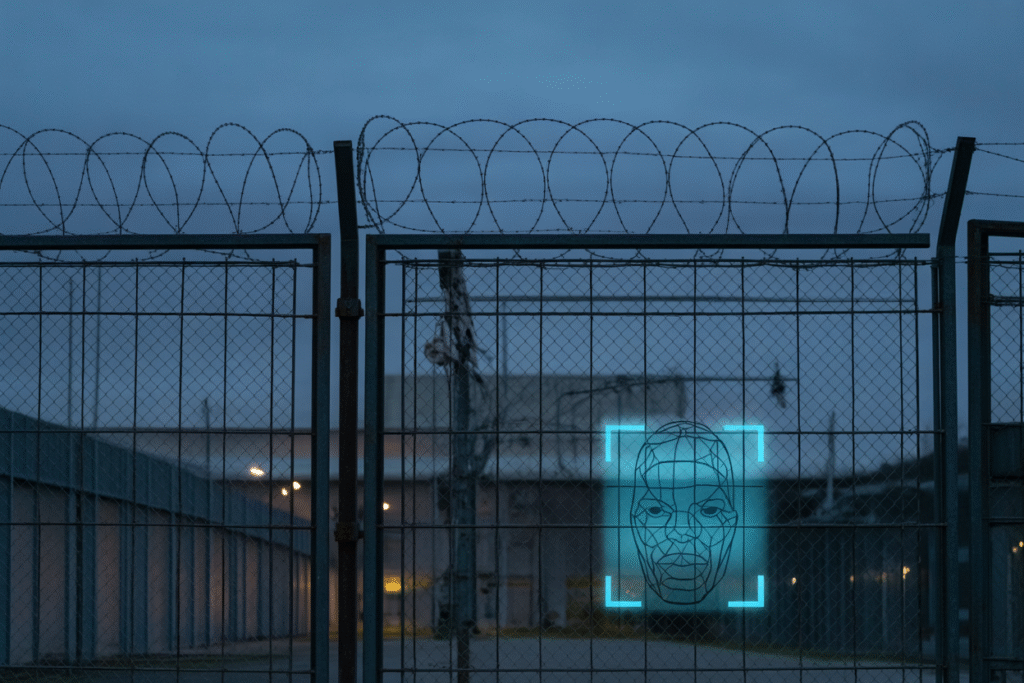

What if the next headline isn’t about politics as usual, but about an AI surveillance system quietly being built to watch us all? A new theory, equal parts chilling and captivating, claims that former President Trump is constructing high-tech detention centers nicknamed “Alligator Alcatraz,” complete with federal police and an all-encompassing AI surveillance network. The twist? It’s allegedly designed to be turned against everyday Americans the moment power changes hands. Let’s unpack the story, the stakes, and why this debate refuses to die.

From Meme to Movement: How the Theory Took Off

It started with a single post. Citizen journalist Diligent Denizen painted a picture straight out of dystopian fiction: sprawling camps, federal agents in unmarked vans, and an AI surveillance grid tracking every face, phone, and footstep. Within hours the thread exploded—167 likes, 55 replies, and thousands of views. Why the traction? Because the story taps a nerve we all feel: the line between safety and control has never been blurrier.

Supporters point to real-world breadcrumbs. Facial recognition already dots city streetlights. Data brokers sell location histories for pennies. Police departments pilot predictive-policing algorithms. When you stitch these fragments together, the leap to “Alligator Alcatraz” feels less like paranoia and more like pattern recognition.

Yet skeptics roll their eyes. They see classic conspiracy DNA: a charismatic figure, a shadowy deep state, and a ticking-clock warning. Still, dismissing the idea outright misses a crucial point—AI surveillance isn’t hypothetical. It’s here, it’s scalable, and whoever holds the switch writes the rules.

The Double-Edged Lens: Pros, Cons, and Unintended Consequences

Imagine AI cameras that can spot a fugitive in a stadium crowd before a single officer blinks. That’s the promise pitched by tech vendors and security hawks. Faster response times, fewer false arrests, and a data trail that keeps everyone honest. On paper, it’s a public-safety jackpot.

But flip the lens. The same system can log every protester’s face, map friendships from phone pings, and quietly flag “suspicious” behavior patterns like loitering near a courthouse. Who decides what counts as suspicious? Who audits the auditors? When accountability diffuses across algorithms and agencies, mistakes become ghost stories—hard to trace, harder to correct.

Then there’s the economic ripple. Human analysts, dispatchers, even beat cops risk displacement as AI systems promise 24/7 vigilance without pensions or coffee breaks. Entire job categories could shrink overnight, widening the very inequality that fuels distrust in government.

And let’s talk politics. If today’s administration vows the tech will only target “bad actors,” what happens when the next team rewrites the definition? History shows that tools forged for one purpose rarely stay in a single pair of hands. Watergate taught us that wiretaps can be turned on political opponents. The Patriot Act taught us metadata can metastasize. AI surveillance, scaled nationwide, would be the most powerful switch yet to change hands.

What Happens Next: Regulation, Resistance, or Reality Check?

So where do we go from here? Three paths are already forming.

1. The Regulatory Route: Senators like Josh Hawley are probing Meta’s AI governance, signaling that Congress smells smoke. Expect hearings, white papers, and maybe a landmark bill that tries to fence in how biometric data is collected, stored, and shared. The upside—clear rules could build public trust. The downside—lobbyists excel at loopholes.

2. The Resistance Toolkit: Privacy activists push encrypted messaging, facial-recognition-blocking clothing, and local bans on predictive policing. San Francisco, for one, barred its city agencies from using facial recognition in 2019. The grassroots message is simple: if the feds won’t pump the brakes, communities will.

3. The Reality Check: Tech ethicists warn that the debate itself can become noise that drowns nuance. Not every camera is Big Brother, and not every algorithm is biased. The key is transparent testing, open datasets, and independent audits—boring but vital guardrails that keep innovation from mutating into intrusion.

Which path wins? That depends on how loudly voters demand answers and how quickly lawmakers trade campaign slogans for concrete safeguards. One thing is certain: the AI surveillance genie is already out of the lamp. Our next move decides whether it grants wishes or starts taking names.