Inside the trenches, drone pilots are being ordered to fight like infantry—raising urgent questions about ethics, trust, and the future of AI in warfare.

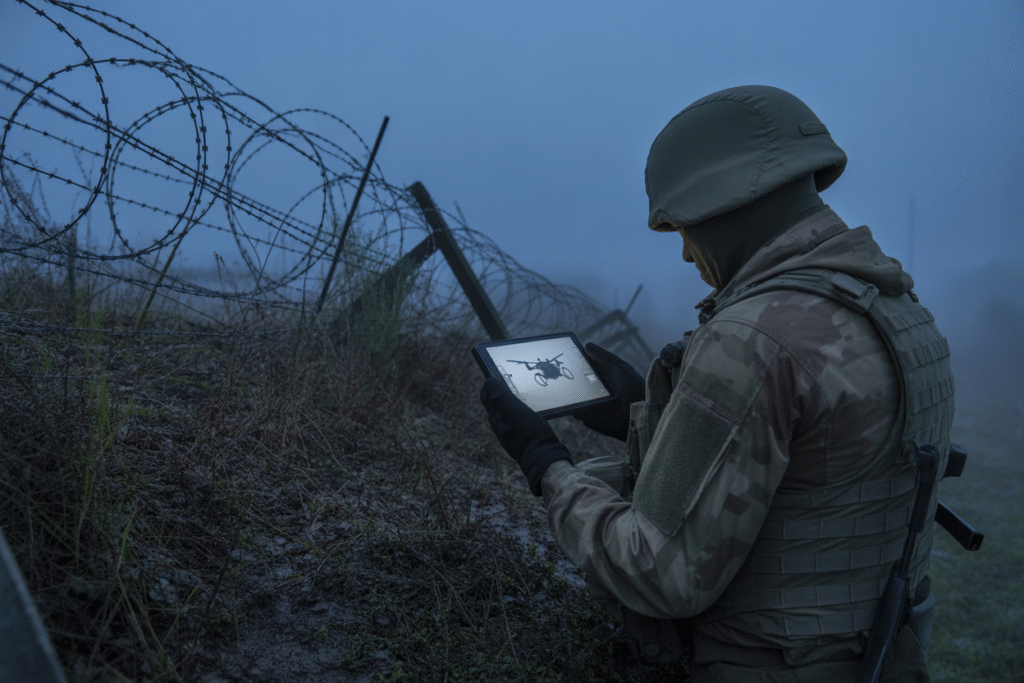

Imagine a drone pilot who trained for months to master cutting-edge AI systems suddenly handed a rifle and told to charge a trench. That exact scenario is playing out in Ukraine right now, and it is sparking a firestorm of debate about how far we should let technology push human soldiers. In this post we unpack the real-time controversy, weigh the ethical stakes, and ask what it means for every army eyeing autonomous weapons.

The Tweet That Started It All

A Ukrainian frontline analyst known as Tatarigami_UA fired off a blunt thread that ricocheted across military forums. He accused commanders of treating drone operators as disposable infantry, sending them into trenches because regular troops were depleted. The post exploded with thousands of likes, retweets, and heated replies from soldiers, veterans, and tech ethicists worldwide. Why did a single tweet ignite such fury? Because it exposed a raw nerve: the fear that high-tech militaries will sacrifice human expertise on the altar of short-term gains.

From Cockpit to Trenches

Specialist drone pilots spend months, and in some cases years, learning AI-assisted targeting, terrain mapping, and encrypted comms. When those specialists are reassigned to frontline trenches, two things happen. First, the military loses hard-to-replace technical know-how. Second, morale plummets: why master cutting-edge AI if you will still end up in a foxhole? Stories are emerging of pilots refusing orders or quietly deserting, eroding trust up and down the chain of command. The irony is brutal: the very technology meant to keep soldiers safer is now pushing them closer to danger.

Ethics on the Battlefield

Military ethicists are asking the same questions. Is it justifiable to use highly trained technologists as foot soldiers simply because algorithms can now steer drones? Does the promise of AI efficiency excuse the human cost? Critics argue that once commanders view personnel as interchangeable units, the slide toward fully autonomous weapons becomes steeper. Supporters counter that desperate wars demand desperate measures. The debate is no longer academic: it is live-tweeted from bunkers and dissected on late-night news panels.

Recruitment and Retention Crisis

Ukraine's is not the only army struggling to fill specialized roles. Across NATO, recruitment ads boast of AI-driven systems that reduce risk, yet soldiers see drone pilots being treated as expendable. Word travels fast in military chat rooms: if expertise is not valued, why enlist? The result is a quiet but growing retention crisis. Veterans warn that once institutional memory walks out the door, no algorithm can replace it. Meanwhile, adversaries watch and learn, adapting tactics that exploit overextended human operators.

What Happens Next

Short-term fixes such as bonuses, better gear, or stricter orders will not solve a systemic problem. Long-term solutions must balance lethal autonomy with human oversight, ensuring that AI enhances rather than replaces human judgment. Policymakers need transparent guidelines on when and how to deploy autonomous systems, and soldiers need assurance that their specialized skills matter. The stakes are global: if Ukraine’s drone dilemma spreads, other armies will face the same ethical crossroads. The question is not whether AI will fight future wars, but how much humanity we are willing to keep in the loop.