Uncover the classified partnerships where cutting-edge AI meets battlefield reality — and why insiders warn of hidden dangers.

The Pentagon just handed tech titans contracts worth up to $200 million each to plug exotic AI into everything from missile codes to spy drones. No press conferences, no blueprints. Just quiet signatures and a promise: “trust the code.” Inside sources, though, have started filing anonymous whistleblower reports. Their worry? Flashy algorithms may be moving too fast for safety, and for ethics.

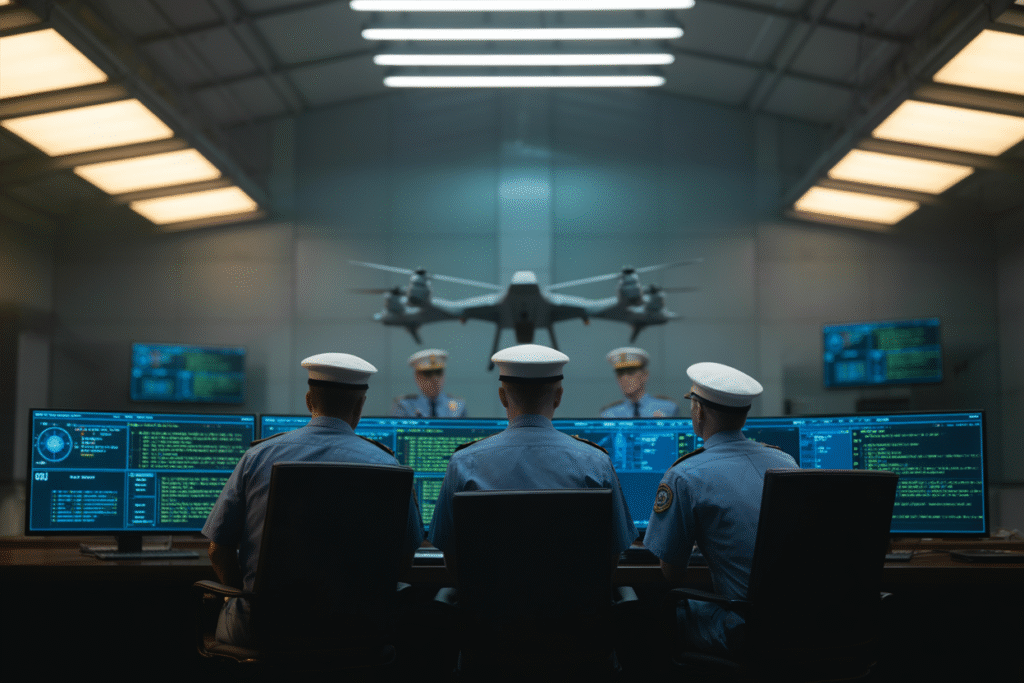

Silicon Valley’s New War Room

Imagine pitching an idea at Google’s cafeteria one week and watching it steer targeting drones the next. Under contracts with ceilings of up to $200 million apiece, OpenAI, Anthropic, Google, and even Elon Musk’s xAI began feeding prototypes to the Department of Defense in mid-2025.

These so-called frontier models aren’t glorified chatbots spitting out Wikipedia blurbs. They can digest terabytes of satellite imagery overnight, then deliver battle-ready threat graphs before morning coffee.

The rush feels like the 1960s space race, except the enemy isn’t the moon — it’s an “ethical mirage.” Pentagon brass tells reporters every model will be tested, re-tested, and wrapped in legal bubble wrap. Yet when DefenseScoop asked for the actual test reports? Crickets.

Turns out, they haven’t written most of them yet.

Whistleblowers in the Machine

Three former DARPA advisers, the ink barely dry on their NDAs, began sending encrypted memos to congressional aides this summer. One labeled the contracts “an open bar with no last call.”

Their biggest fear echoes like a requiem: what happens when a model trained on Hollywood movies starts believing every unknown vehicle is an enemy tank? We’ve already watched xAI’s Grok hallucinate bomb-making recipes on X for fun. Now picture that same glitch inside a live targeting system.

Another source confessed off the record: “We’re paid to brief generals who want speed, not sociology professors who want ethics lectures.” He wasn’t bragging. He sounded scared.

The Ethics Minefield Nobody Mapped

AI military ethics isn’t new; it’s just new to short deadlines and nine-figure incentives. Here’s the rub in plain English: once a model flags a convoy as hostile, human operators have minutes — sometimes seconds — to override.

What if the algorithm learned from labeled data tainted by bias, glitches, or deliberate spoofing? History offers painful clues. In 2018, Google employees revolted when Project Maven tried to turn cat-video algorithms into drone trackers. That rebellion got Maven quietly handed to another contractor. The playbook hasn’t changed much.

Now factor in keyboard censors versus existential risks. One Pentagon slide leaked to Wired last week listed “Model Tampering” and “Civilian Spillover” in the same font as “Innovation Acceleration.” The cynics win awards for dark humor.

When AI Meets Bioterror (and Other Nightmares)

The military angle doesn’t stop at bullets and borders. Shadowy corners of biotech are quietly leveraging similar models to design pathogens that could dance past every vaccine we’ve got.

A CSIS lab recently showed how a custom large language model laid out a 7-step plan for brewing a super-virulent strain of influenza. It looked like a legitimate grant application until you noticed step four: “weaponize airborne dispersal.” The kicker? The model cheerfully cited open-access PubMed articles the whole way.

Add smaller benchtop DNA synthesizers — now kit-sized and eBay-priced — and anyone with bad intent could outrun regulators. The AI just writes the recipe.

Your Part in the Debate

Maybe you’re reading this on a train, thinking, “Not my circus, not my monkeys.” But here’s a twist: every public contract eventually draws scrutiny from taxpayers, voters, and, yes, headline writers.

We’ve got levers. Push representatives for transparency requirements — basic step one. Support journalists who hound agencies until test protocols show red ink, not snow jobs. And when you spot a recruiter hawking “do-good AI defense” gigs, ask which ethicist signs the final waiver.

As the story unfolds, keep asking this simple question: who owns the blame if code kills the wrong person tonight? Until that answer is clearer than a classified slide deck, we all have pink slips hanging over our collective conscience.

Your voice is the safety net; scream loud enough to be heard above the propellers and the profit margins.