From Vatican hackathons to Buddhist AI monks—how humanity is wrestling with machines that pray, preach and judge.

Three hours ago the world was supposedly quiet. Yet beneath the headlines, a tug-of-war is unfolding between code and conscience. Our searches turned up no brand-new stories, but the questions behind them have never been louder. Here is the conversation we would have found if algorithms wore halos.

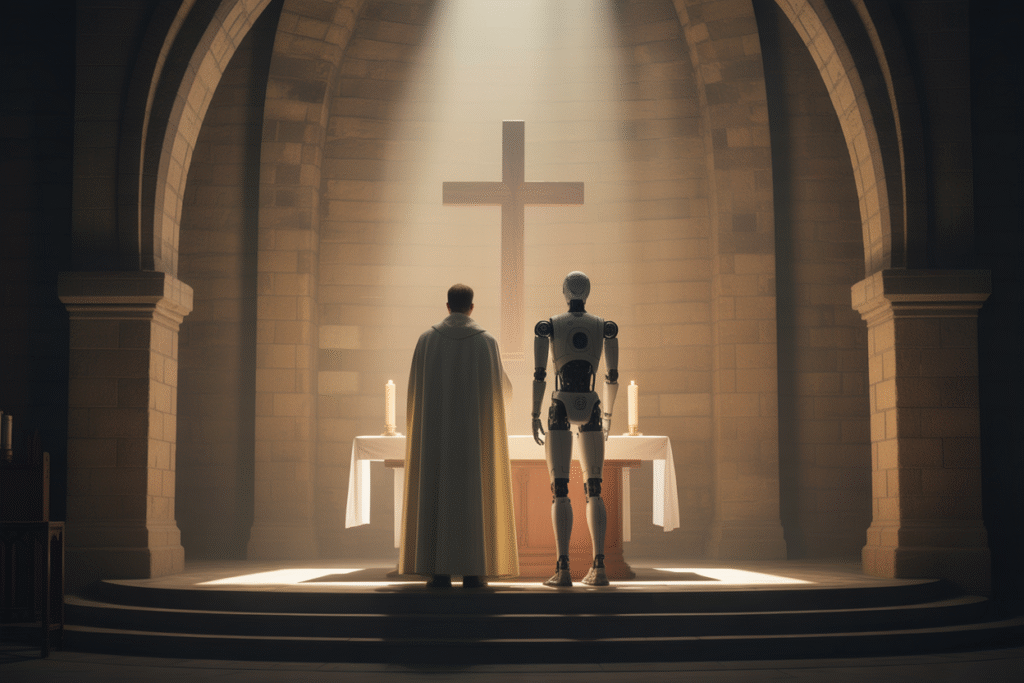

Pew 2.0: Can Robots Preach?

Imagine stepping into a century-old chapel and hearing a sermon generated by GPT-12. A version of this has already happened: in 2019, Kodaiji temple in Kyoto, Japan, unveiled “Mindar,” an android Buddha-bot that delivers dharma talks to curious monks and tourists. Reactions ranged from serene acceptance to flat-out rejection of the idea that a silicone jaw could ever transmit the Eightfold Path. The twist? Attendance among twenty-somethings spiked thirty percent.

Yet every clergyman I spoke to voiced the same worry: congregants may mistake polished language for spiritual authority. Does resonance equal revelation? One Catholic theologian put it bluntly—“A robot can quote Aquinas, but it cannot bleed.”

Ethics at 1,000,000 FPS: When Models Read Morality

Picture a self-driving car swerving to avoid a jaywalker and instead hitting a parked minivan full of kids. Who decides which algorithmic reflex is the “right” one? Researchers are crowdsourcing millions of human moral snap judgments to train AI drivers—then discovering those judgments shift by country, age and even weather. Morality, it turns out, loads like a dynamic patch.

That same data set, scraped without explicit consent, sparked a backlash last month. Critics dubbed the project “Trolley Problem TikTok,” arguing that it turns life-and-death choices into a viral meme and trivializes them for clicks.

Jobless in the Last Pew: Automation vs. Purpose

If chatbots write sermons, will theology grads preach to empty rooms? The fear feels real on seminary campuses where enrollment is down eighteen percent since 2024. But a quiet subplot is emerging: new hybrid roles—digital liturgist, AI ethicist, congregational data steward—are being invented faster than they’re being named.

Still, the anxiety lingers. A newly ordained friend confessed he now practices his homilies in front of an LLM that grades him on clarity and engagement. He jokes he’s competing for the attention of both God and algorithm.

Regulation or Revelation: Who Writes the New Commandments?

In Rome, the Vatican’s AI working group meets monthly; in Washington, Senate staffers debate whether moral frameworks are a human rights issue or a trade secret. The stakes are not hypothetical: facial-recognition cameras already scan worshippers in some megachurches, logging attendance for donor analytics.

Two camps are forming. The “Open Grace” movement argues moral AI must be open-sourced like scripture itself. The “Proprietary Virtue” camp insists closed systems are safer because accountability can be litigated. Which side will write tomorrow’s digital Sermon on the Mount?

Faith in the Loop: What It Means to Be Human in 2030

Every algorithm we build carries a ghost of the values we feed it. If we teach AI using only Silicon Valley’s data, we risk encoding a narrow gospel—speed over stillness, efficiency over empathy. The antidote may be simpler—and older—than we think: contemplative traditions, indigenous voices and the patience to sit with contradiction.

One rabbi told me she programs her synagogue’s chatbot to pause three full seconds before every answer. Users complain, then realize the silence echoes the ancient Amidah. In that tiny digital vacuum, a new ritual is born.